QC Scope is a plugin for FIJI designed to process microscope images for Quality Control purposes.

Written by Nicolas Stifani from the Centre d'Innovation Biomédicale de la Faculté de Médecine de l'Université de Montréal.

This page provides a practical guide for microscope quality control. By following the outlined steps, using the provided template files, and running the included scripts, you will have everything needed to easily generate a comprehensive report on your microscope's performance.

Equipment used

- Thorlabs Power Meter (PM400) and sensor (S170C)

- Thorlabs Fluorescent Slides (FSK5)

- TetraSpeck™ Fluorescent Microspheres Size Kit (mounted on slide) ThermoFisher (T14792)

Software used

- FIJI

- QC Scope Plugin for FIJI

- MetroloJ_QC Plugin for FIJI

- iText Plugin for FIJI (required for MetroloJ_QC)

- R from the CRAN R Project

- I typically use RStudio the integrated development environment (IDE) for R

- Bulk Rename Utility is a powerful small program to rename your files.

Excel Templates and Scripts

Please note that during quality control, you may, and likely will, encounter defects or unexpected behavior. This practical guide is not intended to assist with investigating or resolving these issues. With that said, we wish you the best of luck and are committed to providing support. Feel free to reach out to us at microscopie@cib.umontreal.ca

Illumination Warmup Kinetic

When starting light sources, they require time to reach a stable and steady state. This duration is referred to as the warm-up period. To ensure accurate performance, it is essential to record the warm-up kinetics at least once a year to precisely define this period. For a detailed exploration of illumination stability, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Acquisition protocol

Results

Conclusion

Illumination Maximum Power Output

This measure assesses the maximum power output of each light source, considering both the quality of the light source and the components along the light path. Over time, we expect a gradual decrease in power output due to the aging of hardware, including the light source and other optical components. These measurements will also be used to track the performance of the light sources over their lifetime (see Long-Term Illumination Stability section). For a detailed exploration of illumination properties, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Acquisition protocol

Results

Conclusion

Illumination Stability

The light sources used on a microscope should remain constant or at least stable over the time scale of an experiment. For this reason, illumination stability is recorded across four different time scales:

- Real-time Illumination Stability: Continuous recording for 1 minute. This represents the duration of a z-stack acquisition.

- Short-term Illumination Stability: Recording every 1-10 seconds for 5-15 minutes. This represents the duration needed to acquire several images.

- Mid-term Illumination Stability: Recording every 10-30 seconds for 1-2 hours. This represents the duration of a typical acquisition session or short time-lapse experiments. For longer time-lapse experiments, a longer duration may be used.

- Long-term Illumination Stability: Recording once a year or more over the lifetime of the instrument.

For a detailed exploration of illumination stability, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Real-time Illumination Stability

Acquisition protocol

Results

Conclusion

Short-term Illumination Stability

Acquisition protocol

Results

Conclusion

Mid-term Illumination Stability

Acquisition protocol

Results

Conclusion

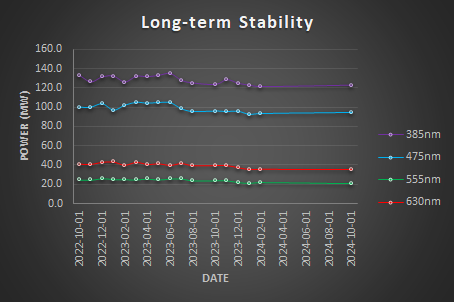

Long-term Illumination Stability

Long-term illumination stability measures the power output over the lifetime of the instrument. Over time, we expect a gradual decrease in power output due to the aging of hardware, including the light source and other optical components. These measurements are not an experiment per se; they are the maximum power output measurements tracked over time.

Acquisition protocol

Results

Conclusion

Illumination Stability Conclusions

| Stability Factor | Real-time 1 min | Short-term 15 min | Mid-term 1 h |
|---|---|---|---|
| 385nm | 99.99% | 100.00% | 99.98% |
| 475nm | 99.99% | 100.00% | 99.98% |
| 555nm | 99.97% | 100.00% | 99.99% |
| 630nm | 99.99% | 99.99% | 99.97% |

The light sources are highly stable (Stability >99.9%).

Metrics

- The Stability Factor focuses on the range of values (difference between max and min) relative to their sum. It gives an intuitive measure of how tightly the system stays within a defined range: the closer to 100%, the higher the stability.

- The Coefficient of Variation focuses on the dispersion of all data points (via the standard deviation) relative to the mean. A lower coefficient indicates better stability around the mean.
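As an illustration of the two metrics above, here is a minimal Python sketch. It assumes the Stability Factor is computed as 100 × (1 − (max − min) / (max + min)), which is one reading of the description above, and it uses the population standard deviation for the Coefficient of Variation. The function names and the power readings are made up for this example and are not taken from the provided templates or scripts.

```python
from statistics import mean, pstdev


def stability_factor(powers):
    """Stability (%) = 100 * (1 - (max - min) / (max + min)).

    ASSUMPTION: this formula is our reading of "range relative to the sum".
    """
    p_max, p_min = max(powers), min(powers)
    return 100.0 * (1.0 - (p_max - p_min) / (p_max + p_min))


def coefficient_of_variation(powers):
    """CV (%) = 100 * standard deviation / mean (population standard deviation)."""
    return 100.0 * pstdev(powers) / mean(powers)


# Hypothetical power readings in mW (illustration only, not measured data)
readings_mw = [10.00, 10.01, 9.99, 10.02, 9.98]

print(f"Stability factor: {stability_factor(readings_mw):.2f} %")
print(f"Coefficient of variation: {coefficient_of_variation(readings_mw):.3f} %")
```

The Stability Factor only looks at the two extreme readings, so a single outlier changes it strongly, whereas the Coefficient of Variation takes every reading into account.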

Illumination Input-Output Linearity

This measure compares the power output as the input varies. A linear relationship is expected between the input and the power output. For a detailed exploration of illumination linearity, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.
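One possible way to quantify linearity is a least-squares line fit of the measured power against the input setting, together with the coefficient of determination (R²). The sketch below is an assumption about how this could be done, not the method used by the provided scripts; the function name and the values are hypothetical.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit y = slope * x + intercept, with R squared."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual and total sums of squares give the coefficient of determination
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared


# Hypothetical values: input setting (%) and measured power (mW)
input_percent = [0, 25, 50, 75, 100]
output_mw = [0.0, 2.6, 5.0, 7.4, 10.0]

slope, intercept, r_squared = linear_fit(input_percent, output_mw)
print(f"slope={slope:.4f} mW/%, intercept={intercept:.3f} mW, R2={r_squared:.4f}")
```

An R² close to 1 suggests a linear response; the residuals at each input setting show where the response deviates.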

Acquisition protocol

Results

Conclusion

Objectives and Cubes Transmittance

Since we are using a power meter, we can easily assess the transmittance of the objectives and filter cubes. This measurement compares the power output when different objectives and filter cubes are in the light path. It evaluates the transmittance of each objective and compares it with the manufacturer’s specifications. This method can help detect defects or dirt on the objectives. It can also verify the correct identification of the filters installed in the microscope.

Objectives Transmittance

Acquisition protocol

Results

Conclusion

Cubes Transmittance

Acquisition protocol

Results

Conclusion

We are done with the power meter.

Field Illumination Uniformity

Having confirmed the stability of our light sources and verified that the optical components (objectives and filter cubes) are transmitting light effectively, we can now proceed to evaluate the uniformity of the illumination. This step assesses how evenly the illumination is distributed. For a comprehensive guide on illumination uniformity, refer to the Illumination Uniformity by the QuaRep Working Group 03.

Acquisition protocol

Processing

Results

Conclusion

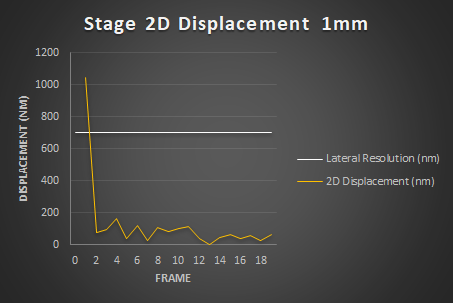

XYZ Drift

This experiment evaluates the stability of the system in the XY and Z directions. As noted earlier, when an instrument is started, it requires a warmup period to reach a stable steady state. To determine the duration of this phase accurately, it is recommended to record a warmup kinetic at least once per year. For a comprehensive guide on Drift and Repositioning, refer to the Stage and Focus Precision by the QuaRep Working Group 06.

Acquisition protocol

Processing

Results

Conclusion

Stage Repositioning Dispersion

This experiment evaluates how accurate the system is in XY by measuring the dispersion of repositioning. Several variables can affect repositioning: i) time, ii) traveled distance, iii) speed and iv) acceleration. For a comprehensive guide on Stage Repositioning, refer to the Stage and Focus Precision by the QuaRep Working Group 06 and the associated XY Repositioning Protocol.

Acquisition protocol

Processing

Results

Conclusion

Further Investigation

Channel Co-Alignment

Channel co-alignment or co-registration refers to the process of aligning image data collected from multiple channels. This ensures that signals originating from the same location in the sample are correctly overlaid. This process is essential in multi-channel imaging to maintain spatial accuracy and avoid misinterpretation of co-localized signals. For a comprehensive guide on Channel Co-Registration, refer to the Chromatic Aberration and Co-Registration by the QuaRep Working Group 04.

Acquisition protocol

Processing

Results

Conclusion

Legend (Wait for it)...

For a comprehensive guide on Detectors, refer to the Detector Performances of the QuaRep Working Group 02.

Acquisition protocol

Results

Conclusion

Legend (Wait for it...) dary

For a comprehensive guide on Lateral and Axial Resolution, refer to the Lateral and Axial Resolution of the QuaRep Working Group 05.

Acquisition protocol

Results

Conclusion

A microscope can capture a defined area of a sample. This area is called the Field-of-View (FOV) and depends on the optical configuration and the acquisition device of the microscope. This is a limiting feature of microscopy: to observe at a higher resolution, the total visualized area is reduced. This can be an issue when trying to visualize features that are bigger than the FOV.

One way to deal with this issue is to acquire multiple images and stitch them together after acquisition. Instead of acquiring strictly adjacent FOVs, it is best to have partially overlapping regions. These regions will help to stitch the images together.

While many software packages provide a proprietary stitching solution, we will focus here on the free and versatile plugin for ImageJ named Grid/Collection Stitching.

Developed by Stephan Preibisch, this plugin is also part of the FIJI distribution of ImageJ.

Stitching process

Stitching usually occurs in 3 steps:

- The first is the "layout", which finds the adjacent images for each given image. This step approximately places the images in relation to each other.

- The second step finely transforms (rotation, translation) each image relative to the adjacent ones. It matches features detected in one image to the same features in the adjacent image.

- The last step blends the images so the result appears smooth.

Layout

Three pieces of information can be used to define the layout.

1. Images metadata

Modern microscopes use motorized stages to move the sample in X and Y. These coordinates can be stored in the image metadata and used during stitching. Knowing the approximate position of each tile greatly helps stitching, as only the fine matching between the different images remains to be computed.

2. Tiles configuration and acquisition order

If you have 25 images (Image 1, Image 2, ...) and know that they come from a 5 x 5 acquisition from the top left to the bottom right, by row from left to right, then you can quickly place your images at their approximate positions. Sometimes the tile configuration is saved directly in the file names (Image X1Y1, Image X2Y1, etc.); this can also be used to define the approximate tile layout.

3. Images themselves

The data in the images can also be used to define the layout. This requires computing power, as it usually parses all possible pairwise combinations and computes a correlation coefficient for each. It then matches the images with the highest correlation.
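To illustrate the second option (known grid and acquisition order), the following Python sketch computes approximate tile positions for a row-by-row, left-to-right acquisition starting at the top left. The tile size of 512 pixels and the 20% overlap are hypothetical values chosen for the example, and the function name is ours; this is not how the plugin computes its layout internally.

```python
def grid_positions(columns, rows, tile_width, tile_height, overlap_percent):
    """Approximate (x, y) pixel position of each tile, in acquisition order.

    ASSUMPTION: tiles are acquired row by row, left to right, from the top left.
    """
    # Distance between the origins of two neighbouring tiles
    step_x = tile_width * (1 - overlap_percent / 100)
    step_y = tile_height * (1 - overlap_percent / 100)
    positions = []
    for row in range(rows):
        for column in range(columns):
            positions.append((column * step_x, row * step_y))
    return positions


# Hypothetical 5 x 5 acquisition of 512 x 512 pixel tiles with 20% overlap
positions = grid_positions(columns=5, rows=5, tile_width=512,
                           tile_height=512, overlap_percent=20)
for index, (x, y) in enumerate(positions[:6], start=1):
    print(f"Image {index}: x={x:.1f}, y={y:.1f}")
```

These positions are only a starting point; the fine matching described in the Transformation step still adjusts each tile.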

Transformation

Once the layout is defined, the images need to be finely adjusted to one another. Because microscopes are not perfect, some translation and rotation may be needed to finely match identified features in adjacent images. To make this possible, images are usually acquired with a 10 to 20% overlapping region. This region is used to finely match adjacent images.

Blending

A blending can be applied to the overlapping region to ensure a smooth tiled result.

Protocol

- Open up FIJI

- Open the Grid/Collection stitching plugin: Plugins>Stitching>Grid/Collection stitching

Your files are named Tile_x001_y001.tif, and you know the grid size and the percentage overlap

- Type: Filename defined position

- Order: Defined by filename

- Click OK

- Indicate the grid size (for example 5x5 if you have 25 images)

- Under directory click Browse

- Select the folder containing your files

- Click Choose

- Under File names for tiles, enter Tile_x{xxx}_y{yyy}.tif

Several options are available. I recommend using the following:

- Add tiles as ROIs (to check tiling quality)

- Compute Overlap

- Display fusion

Your files are named Tile_001.tif, and you know the grid size, the acquisition order and the percentage overlap

- Type: Grid: Column by column

- Order: Down & Right

- Click OK

- Indicate the grid size (for example 5x5 if you have 25 images)

- Under directory click Browse

- Select the folder containing your files

- Click Choose

- Under File names for tiles, enter Tile_{iii}.tif

Several options are available. I recommend using the following:

- Add tiles as ROIs (to check tiling quality)

- Compute Overlap

- Display fusion

- Subpixel accuracy

You are capturing images with a manual stage. If you read this before the acquisition, I suggest acquiring your tiles using a given grid size and scheme (for example 3x3, snake horizontal right). This will allow you to use the process above (except with Type: Grid: Snake by rows). Make sure to have some overlap between images so that they can be finely placed. Since you are probably reading this after your acquisition, your files are saved as Tile_001.tif... but you do not know the grid size, the acquisition order or the percentage overlap

- Type: Unknown position

- Order: All files in Directory

- Click OK

- Under directory click Browse

- Select the folder containing your files

- Check Confirm files

Several options are available. I recommend using the following:

- Add tiles as ROIs (to check tiling quality)

- Ignore Z stage position

- Subpixel accuracy

- Display fusion

- Computation parameters: Save computation time

If you choose Type: Grid, the plugin expects sequentially numbered files (i, i+1, etc.).

If you choose Positions from file, the plugin expects a single multi-series file. To combine several files into a single T series, open the images (individually or all together), then combine them into a stack:

Image>Stacks>Images to Stack

Check Use titles as labels

Then convert the stack to a T series:

Image>Hyperstacks>Stack to Hyperstack

Set Slices (z) to 1 and Frames (t) to the number that was previously in Slices (z).

In my experience, proprietary software does not encode the X and Y values in the metadata properly, so this method is not often used.

Type: Positions from file

Use this if you want to use the image metadata to define the tile positions. This only works if you have a single input file containing all the tiles. In my experience this works when the file is saved in the proprietary format of the acquisition software.

You can also use this type of stitching if you have an additional text file defining the position of each image. You can also create this file yourself:

    # Define the number of dimensions we are working on
    dim = 3
    # Define the image coordinates (in pixels)
    img_01.tif; ; (0.0, 0.0, 0.0)
    img_05.tif; ; (409.0, 0.0, 0.0)
    img_10.tif; ; (0.0, 409.0, 0.0)
    img_15.tif; ; (409.0, 409.0, 0.0)
    img_20.tif; ; (0.0, 818.0, 0.0)
    img_25.tif; ; (409.0, 818.0, 0.0)
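If you need to create such a position file for many tiles, a short script can generate it. The Python sketch below builds the text in the format shown above from a list of file names and coordinates. The function name is ours, and saving the result as TileConfiguration.txt is an assumption based on the plugin's usual default layout file name; adapt it to your own setup.

```python
def tile_configuration_text(tiles, dim=3):
    """Build the content of a tile position file.

    tiles: list of (file_name, (x, y, z)) with coordinates in pixels.
    """
    lines = [
        "# Define the number of dimensions we are working on",
        f"dim = {dim}",
        "# Define the image coordinates (in pixels)",
    ]
    for file_name, coordinates in tiles:
        coordinate_text = ", ".join(f"{float(c):.1f}" for c in coordinates)
        lines.append(f"{file_name}; ; ({coordinate_text})")
    return "\n".join(lines) + "\n"


# Same coordinates as the example above
tiles = [
    ("img_01.tif", (0, 0, 0)),
    ("img_05.tif", (409, 0, 0)),
    ("img_10.tif", (0, 409, 0)),
    ("img_15.tif", (409, 409, 0)),
]
config_text = tile_configuration_text(tiles)
print(config_text)

# To save it next to the images (file name is an assumption):
# from pathlib import Path
# Path("TileConfiguration.txt").write_text(config_text)
```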

Notes

The higher the overlap, the more computing power is required.

The percentage overlap is approximate. Start low and increase it until the result is satisfactory.

It is much easier to stitch when the layout is known.

It is much easier to stitch images when there are many visible features: a tissue slide is easier than a sparse cell culture; bright field images are easier than fluorescence images.

Renaming your files

You can easily rename files on a Mac using Automator.

On a PC you can use Bulk Rename Utility
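Renaming can also be scripted. As a hedged alternative to the tools above, the Python sketch below converts sequential names (Tile_001.tif, Tile_002.tif, ...) into grid names (Tile_x001_y001.tif, ...). It assumes the tiles were acquired row by row, from left to right, and that the number of columns is known; the function names are hypothetical. Run it with dry_run=True first to check the proposed names before renaming anything.

```python
from pathlib import Path


def grid_name(index, columns, prefix="Tile", extension=".tif"):
    """Grid file name for a 1-based sequential index.

    ASSUMPTION: row-by-row acquisition, left to right, starting at the top left.
    """
    row, column = divmod(index - 1, columns)
    return f"{prefix}_x{column + 1:03d}_y{row + 1:03d}{extension}"


def rename_tiles(folder, columns, dry_run=True):
    """Return (old_name, new_name) pairs; rename the files only if dry_run is False."""
    pairs = []
    for path in sorted(Path(folder).glob("Tile_[0-9][0-9][0-9].tif")):
        index = int(path.stem.split("_")[1])
        new_name = grid_name(index, columns)
        pairs.append((path.name, new_name))
        if not dry_run:
            path.rename(path.with_name(new_name))
    return pairs


print(grid_name(1, columns=5))   # Tile_x001_y001.tif
print(grid_name(6, columns=5))   # Tile_x001_y002.tif
```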

More information

https://imagej.net/plugins/image-stitching

Introduction

Microscopy is an approximation of reality. The point spread function is the best example of this: a single point will appear as a blurry ellipse in light microscopy. This transformation depends on the optical components, which vary with time.

Quality control monitors this transformation over time.

Download MetroloJ QC from GitHub (MontpellierRessourcesImagerie/MetroloJ_QC)

Download iText Library v5.5.13.2 from https://repo1.maven.org/maven2/com/itextpdf/itextpdf/5.5.13.2/itextpdf-5.5.13.2.jar

Download ImageJ or FIJI from imagej.net

Open ImageJ or FIJI

Install MetroloJ QC and iTextPDF by dropping the .jar files onto the ImageJ status bar

Start up the microscope

Follow the set up procedure (load the test sample and focus with the lowest magnification objective).

Adjust to obtain Köhler illumination

Remove the sample from the microscope

On the Nikon Ti2 without ocular, a shadow appears in the upper left corner of the FOV

Take a BF image for Widefield illumination Homogeneity

Analyze the image using MetroloJ QC and check the centering accuracy and total homogeneity

4x

Here the centering is off to the left side and the intensity is falling below 30%.

replace the illumination arm by the application screenlight

removing the objective:

If image is similar then the issue comes from the detection side.

Test all items on the detection path: Objective, filter cubes, lightpath selector, camera

Objective 20x-075

It improves the homogeneity but the centering is still off

60x1.4 gives a perfect homogeneity

Repeat for each objective

Change objective

Adjust Köhler

Take a BF image for Widefield illumination Homogeneity

If illumination is not homogeneous then

Conclusion: The camera may need to be re-aligned to obtain a homogeneous illumination in bright field.

The 5408nm laser line used for YFP is not working. The laser is shining out of the fiber but not reaching the objective. Has the cube been changed?

Immersion Oil

Immersion oil is a very important component. Used with oil immersion objectives, its refractive index (RI) should match the RI of the coverslip glass and of the objective used. Different types or grades are available: A (low viscosity), B (high viscosity), N, F, FF (for fluorescence), etc. Because the refractive index (and the viscosity) varies with temperature, you should buy immersion oil matched to your working temperature. This is usually 23C, but you may also need 30C or 37C.

To date, Cargille provides the best options

Cargille

- Cargille Immersion Oil type FF #16212, 16 oz (473mL), 94$, 0.2$/mL RI=1.48

- Cargille Immersion Oil type HF #16245, 16 oz (473mL), 94$, 0.2$/mL RI=1.51

- Cargille Immersion Oil type LDF #16241, 16 oz (473mL), 94$, 0.2$/mL RI = 1.51

Extremely low fluorescence is achieved by Type LDF and Type HF. Type FF is virtually fluorescence-free, though not ISO compliant. Type HF is slightly more fluorescent than Type LDF, but is halogen-free.

Thorlabs

- MOIL-30 Olympus Type F, 30mL, $84, 2.8$/mL

- MOIL-20LN Leica Type N, 20mL, $75, 3.75$/mL

- OILCL30 Cargille Type LDF, 30mL, $28, 1.1$/mL RI=1.51

- MOIL-10LF Leica Type F, 10mL, $59, 5.9$/mL

https://www.thorlabs.com/newgrouppage9.cfm?objectgroup_id=5381

Zeiss

- Immersion Oil Immersol 518F, 20mL, RI=1.518 at 23C, #444960-0000-000. Prices vary from 56$ to 156$ (and even 580$ each); 2.8$ to 7.8$/mL https://www.micro-shop.zeiss.com/en/ca/accessories/consumables

- 30C 444970-9000-000

- 37C 444970-9010-000

Edmund Optics

- Olympus Low fluorescence immersion oil type F, #86-834, 30mL, 113$; 3.8$/mL https://www.edmundoptics.ca/p/olympus-low-auto-fluorescence-immersion-oil/29243/

Lens Cleaner

Use a lens cleaner to clean objective lenses from oil.

Tiffen

Tiffen is a great product designed for camera lenses but it works great for microscope optics as well

- Tiffen Lens Cleaner 16oz (472mL) #EK1463728T 14$ 3cts/mL https://tiffen.com/products/tiffen-lens-cleaner-16oz

Edmund Optics

- Lens Cleaner #54-828 (8oz, 236mL) 14$; 6 cts/mL

- Purosol #57-727 (4oz, 115mL), 29$, 25 cts/mL

Zeiss, Nikon, Olympus, Leica

- Home made recipe: 85% n-hexane analytical grade, 15% isopropanol analytical grade

Lens tissue

Edmund Optics

- Lens Tissue #60-375 500 sheets 36$ 7cts/sheet

Thorlabs

- Lens Tissue #MC-50E 1250 sheets 93$ 7.6cts/sheet

Tiffen

- Lens Tissue #EK1546027T 250 sheets 112$ 44cts/sheet

Liquid light guides

Excelitas

- 3mm core diameter, 1.5m length sold by Digikey #1601-805-00038-ND 682$

Thorlabs

- 3mm core diameter, 1.2m length #LLG03-4H 410$

- 3mm core diameter, 1.8m length #LLG03-6H 490$

Edmund Optics

- 3 mm core diameter, 1.8m length #53-689 3mm 700$

- Adapters available #66-905

Microscope world

- Liquid Light Guide 3mm core diameter, 1.5m length #805-00038 445$

Bulbs

AVH Technologies

HXP R 120W/45C 780$

OSRAM

XP R 120 W/45 #69119

Other consumables

- Cotton swabs

- Absorbent polyester swabs for cleaning optical components (Alpha, Clean Foam or Absorbond series, TX743B) from www.texwipe.com

- Rubber Blower GTAA 1900 from Giottos www.giottos.com

Let's say you have many images taken the same way from two different samples: one control group and one test group.

How should you analyse them?

The first thing I would do is open a random pair of images (one from the control and one from the test) and have a look at them...

Control Test

Then I would normalize the brightness and contrast for each channel and across images so that the display settings are the same for both images.

Then I would look at each channel individually and use the Fire LUT to better appreciate the intensities.

Finally I would use the Synchronize Windows feature to navigate the two images at the same time

Analyze>Tools>Synchronize Windows

or in a macro

run("Synchronize Windows");

Control Ch1 Test Ch1

Control Ch2 Test Ch2

To my eyes, Ch1 and Ch2 are slightly brighter in the test condition.

Here we can't compare Ch1 to Ch2 because the display ranges are not the same. It seems that Ch2 is much weaker than Ch1, but this is not accurate. The best way to sort this out is to add a calibration bar to each channel (Analyze>Tools>Calibration Bar). The calibration bar is non-destructive, as it is added to the overlay. To "print" it on the image you can flatten it (Image>Overlay>Flatten).

Control Ch1 Control Ch2

If you apply the same display range to Ch1 and Ch2, you can see that Ch1 is more intense overall while Ch2 has a few very strong spots.

Control Ch1 Control Ch2 with the same display as Ch1

Looking more closely

In Ch1 we can see some low-level intensity and a high-intensity circular focus, whereas in Ch2 there is a bean-shaped structure. In the example below, the foci seem stronger in the control than in the test condition.

Control Ch1 Test Ch1

Control Ch2 Test Ch2

But we obviously need some quantification to confirm or refute these first observations.

The first way to address this is in bulk: for example, by measuring the mean intensity for all the images.

If all works fine you should have a CSV file that you can open with your favorite spreadsheet application. This table should have one line per image, with all available measurements for the whole image and for each channel of the image. Of course some measurements will be identical across images because the images were all taken in the same way.

What to do with the file? Explore the data and see if there is any relevant information.

My approach would be to use a short script in R to plot all the data and do some basic statistics

This should give you a PDF file with one plot per page. You can scroll through it and look at the data. p-values from t-tests are indicated on the graphs. As you can see below, the mean intensity in both Ch1 and Ch2 is higher in the test than in the control. What does it mean?

It means that the average pixel intensity is higher in the test condition than in the control condition.

Other values that are significantly different:

- Mean Intensity Ch1 and Ch2 (Control<Test)

- Maximum Intensity of Ch1 (Control>Test) Brightest value in the image

- Integrated Intensity of Ch1 and Ch2(Control<Test) It is equal to the Mean x Area

- Median Ch1 and Ch2 (Control<Test)

- Skew of Ch1 (Control>Test): the third-order moment about the mean. It relates to the distribution of intensities. If it is 0, the intensities are symmetrically distributed around the mean. If it is below 0, the distribution is asymmetric with a tail to the left of the mean (lower intensities); if it is above 0, the tail is to the right (higher intensities).

- Raw Integrated Intensities of Ch1 and Ch2 (Control<Test) Sum of all pixel intensities
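To make the skew measurement more concrete, here is a minimal Python sketch that computes the skewness as the third central moment divided by the standard deviation cubed. This is a standard definition; we assume, without having checked the source code, that it matches what the measurement table reports. The pixel values are made up.

```python
def skewness(values):
    """Skewness = third central moment / (standard deviation ** 3)."""
    n = len(values)
    mean = sum(values) / n
    second_moment = sum((v - mean) ** 2 for v in values) / n
    third_moment = sum((v - mean) ** 3 for v in values) / n
    return third_moment / second_moment ** 1.5


# Made-up pixel intensities (illustration only)
symmetric = [1, 2, 3, 4, 5]          # evenly spread around the mean
bright_spot = [1, 1, 1, 1, 10]       # mostly dim with one bright value
dim_spot = [1, 10, 10, 10, 10]       # mostly bright with one dim value

print(skewness(symmetric))    # 0: symmetric distribution
print(skewness(bright_spot))  # positive: tail towards higher intensities
print(skewness(dim_spot))     # negative: tail towards lower intensities
```

A dim cell with a single very bright focus behaves like the second case, which is why a strong focus pushes the skew towards higher positive values.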

Now we start to have some results and statistically relevant information about the data. The test condition has a higher mean intensity (integrated intensity, median and raw integrated intensity all show the same result) for both Ch1 and Ch2. This is surprising because I had the opposite impression while looking at the images with normalized intensities and the Fire LUT applied (see above). Another surprising result is that the control images have a higher maximum intensity than the test images, but only for Ch1. This is clearly seen in the picture above.

One thing that can explain these results is that the number of cells may differ between the control and the test images. If there are more cells in one condition, then there are more stained pixels (and less background), and the mean intensity would be higher not because the signal itself is higher in each cell but because there are more cells...

To solve this we need to count the number of cells per image. This can be done manually or using segmentation based on intensity.

Looking at the image it seems that Ch1 is a good candidate to segment each cell.

This macro starts to be a bit long but to summarize here are the steps:

- Open each image

- Remove the camera offset (500 GV) and apply a rolling ball background subtraction

- Create an RGB composite combining both channels

- Convert this RGB to a 16-bit image

- Threshold the image using the Otsu algorithm

- Process the binary mask to improve detection (Open, Dilate, Fill Holes)

- Analyze particles to detect cells with size=2-12 circularity=0.60-1.00

- Add the results to the ROI manager

- Save the Mask for the control of segmentation

- Save an RGB image with the detection overlay as a control for the good detection

- Save the ROIs

- Use the ROIs to collect all measurements available and save the result as a csv

- Save the image with the background removed

- Crop each ROI from the image with the background removed to isolate each cell

A few notes:

The camera offset is a value the camera adds to avoid negative values due to noise. The best way to measure it is to take a dark image and measure its mean intensity. If you don't have one at hand, you can choose a value slightly lower than the lowest pixel value found in your images.

The rolling ball background subtraction is a powerful tool to help with the segmentation.

The values for the Analyze Particles detection are the tricky part here. How do I choose them? I use the thresholded (binary) image and I select the average guy: the spot that looks like all the others. I use the wand tool to create a selection and then I run Analyze>Measure. This gives me a good estimate of the size (area) and the circularity. Then I select the tall/skinny and the short/not so skinny guys: I use the wand tool again to select the spots that would be my upper and lower limits. This gives me the range of spot size (area) and circularity. This is a critical step that should be performed by hand prior to running the script. You should do this on a few different images to make sure the values are good enough to account for the variability you will encounter.
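To get a feel for the size and circularity limits, the sketch below computes the circularity as 4π × area / perimeter² (the definition used by ImageJ) and checks whether a spot falls within the ranges used in the macro steps above (size 2-12, circularity 0.60-1.00). The function names are ours, and the example spots are illustrative values rather than real selections.

```python
import math


def circularity(area, perimeter):
    """Circularity = 4 * pi * area / perimeter**2 (1.0 for a perfect circle)."""
    return 4 * math.pi * area / perimeter ** 2


def keep_particle(area, perimeter, size_range=(2.0, 12.0), circ_range=(0.60, 1.00)):
    """True if the spot passes both the size and the circularity filters."""
    # Values slightly above 1 can occur for very small spots, so cap at 1
    circ = min(circularity(area, perimeter), 1.0)
    return (size_range[0] <= area <= size_range[1]
            and circ_range[0] <= circ <= circ_range[1])


# Illustrative spots given as (area, perimeter)
print(round(circularity(5.03, 8.45), 3))  # a roundish, average-sized spot
print(keep_particle(5.03, 8.45))          # passes both filters
print(keep_particle(1.0, 4.0))            # too small
print(keep_particle(5.0, 20.0))           # too elongated or ragged
```

Measuring a few extreme spots by hand, as described above, and passing their values through such a check is a quick way to see whether the chosen ranges are too strict or too permissive.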

Now it is time to check that the job was done properly.

Looking at the control images, the detection isn't bad at all.

The only things missing are the individual green foci seen below. Those cells look different, as the focus is very strong but there is not much fluorescence elsewhere (no diffuse green and no red). I might discuss with the scientist to see if it is OK to ignore them. If not, I would need to change the threshold values and the detection parameters, but let's say it is fine for now.

So now you should have a list of files (images, ROIs and CSV files). We will focus on the CSV files as they contain the number of cells we are looking for and a lot more information we can also use.

We will reuse the previous R script but will add a little part to merge all the CSV files from the input folder.

This script will save the merged data in a single CSV file. Using your favourite spreadsheet application, you will be able to create a table and summarize the data with a pivot table to get the number of cells per group.
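For readers who prefer to stay outside a spreadsheet, the merge and the per-group count can be sketched in a few lines of Python. This is not the R script mentioned above, only an illustration of the same idea. It assumes one row per detected cell and that the group (Control or Test) is the prefix of each CSV file name; both the file naming and the column names in the demo are hypothetical.

```python
import csv
import tempfile
from collections import Counter
from pathlib import Path


def merge_csv_files(folder):
    """Read every CSV in `folder`; return all rows, each tagged with its source file."""
    merged = []
    for csv_path in sorted(Path(folder).glob("*.csv")):
        with csv_path.open(newline="") as handle:
            for row in csv.DictReader(handle):
                row["Source"] = csv_path.name
                merged.append(row)
    return merged


def count_cells_per_group(rows, groups=("Control", "Test")):
    """Count rows (one row = one detected cell) per group.

    ASSUMPTION: the group name is the prefix of the source file name.
    """
    counts = Counter()
    for row in rows:
        for group in groups:
            if row["Source"].startswith(group):
                counts[group] += 1
    return dict(counts)


# Demo on made-up files written to a temporary folder
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "Control_01.csv").write_text("Area,Mean\n5.0,1000\n5.2,1100\n")
    Path(folder, "Test_01.csv").write_text("Area,Mean\n5.4,1200\n")
    rows = merge_csv_files(folder)
    cell_counts = count_cells_per_group(rows)

print(cell_counts)
```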

| Control | Test |
|---|---|
| 1225 | 1360 |

There are slightly more cells in the test than in the control. If we look at the number of detected cells per image, we can confirm that there are more cells in the test condition than in the control condition. Two images in the control have fewer cells than the others. We can go back to the detection to check those.

Looking at the detection images, we can confirm that the two images from the control have fewer cells, so it is not a detection issue.

Together these results show that neither condition has substantially more cells than the other.

On average, between 60 and 65 cells are detected per image, for a total of roughly 1200 to 1400 cells detected per condition.

Then we can look at the graphs and look for what is statistically different. Here is the short list:

- Area Test>Control

- Mean Ch2 Test>Control

- Min Ch2 Test>Control

- Max Ch1 Control>Test

- Max Ch2 Test>Control

- Perimeter Test>Control

- Width and Height Test>Control

- Major and Minor Axis Test>Control

- Feret Test>Control

- Integrated Density Ch1 and Ch2 Test>Control

- Skew and Kurt Ch1 Control>Test

- Raw Integrated density of Ch2 Test>Control

- Roundness Control>Test

Looking at p-values (statistical significance) is a good approach but it is not enough. We should also look at the biological significance of the numbers. For example, the roundness is statistically different between the control and test, but this difference is really small. What does it mean biologically that the test cells are a tiny bit less circular? In this specific case: nothing much. So we can safely set it aside and focus on more important things.

This can easily be done since we have generated a CSV file gathering all the data: Detected-Cells_All-Measurements.csv

Then, for all the variables that are statistically different between the control and test groups, we can have a closer look at the data.
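When reading size measurements, a relative change in area can be converted into the corresponding change in diameter and volume. The Python sketch below does this for the mean areas reported for the two groups (5.03 and 5.35 um2), under the simplifying assumption that cells are spheres seen as discs, so that diameter scales with the square root of the area and volume with the area to the power 1.5. The function name is ours.

```python
def relative_changes(area_control, area_test):
    """Percent change in area, diameter and volume between two mean areas.

    ASSUMPTION: cells are spheres imaged as discs, so
    diameter ~ area ** 0.5 and volume ~ area ** 1.5.
    """
    area_ratio = area_test / area_control
    return {
        "area": (area_ratio - 1) * 100,
        "diameter": (area_ratio ** 0.5 - 1) * 100,
        "volume": (area_ratio ** 1.5 - 1) * 100,
    }


changes = relative_changes(area_control=5.03, area_test=5.35)
for name, percent in changes.items():
    print(f"{name}: +{percent:.1f} %")
```

The same area difference therefore reads as roughly 6% in area, 3% in diameter and 10% in volume, depending on which quantity is considered the most meaningful.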

- Area Control 5.03um2 ; Test 5.35um2 p-value = 1.7e−07

This is a relatively small increase 6%. If we bring this back to the diameter it is even smaller 3% increase. Yet the most relevant value in my view is the volume because cells are spheres in the real life. This increase is about a 10% increase in the cell volume. This is relevant information. Cells in the Test conditions are 10% bigger than in the control condition. - Mean Ch2 Control 1078 GV; Test 1197 GV p-value < 2.2e−16

This means that the bean shape structure labelled by Ch2 is 10% brighter in the test cells than in the controls. Yet the segmentation was performed on the full cell using Ch1 as a proxy. This result prompt for a segmentation based on Ch2. Are the bean shaped bigger or brighter? - Min Ch2 Control 198 GV; Test 213 GV p-value 1e−05

The difference in the minimum of Ch2 represent about 7.5% increase. If the minimum is higher it suggests that there is a global increase in the fluorescent of Ch2 and not a redistribution (ie clusterization). The segmentation on the Ch2 might sort out which option is really occurring - Max Ch1 Control 10111 GV; Test 8393 GV p-value < 2.2e−16

This difference represent a 17% decrease of the maximum intensity of Ch1. If we remember Ch1 has two fluorescent levels, low within the nuclei and an intense foci. Here the maximum represent the Foci only. So we can conclude that the Foci are less intense in the test compare to the control. - Max Ch2 Control 3522 GV; Test 3761 GV p-value = 0.00035

Here we have the opposite situation where in the bean shape structure the maximum intensity is higher in the test condition than the control - Perimeter Control 8.45um ; Test 8.74um p-value = 1.7e−08

Here we have another measurement about the size of the detected cells. Using the area we can estimate about a 3.1% perimeter increase. Here the increase is about 3.5%. This is consistant meaning the increase in area and perimeter are about the same. - Width and Height Control 2.49um; Test 2.57um p-value = 8e−07

Again, this is another measurement of the size of the detected cells, which are larger in the test than in the control.

- Major and Minor axis length Control 2.73um; Test 2.84um p-value = 2.7e−10

Similar to the above, except that instead of measuring the width and height of a rectangle around the cell, an ellipse is fitted to the cell. In my view this is a more relevant variable than the previous one, but since all the data converge on slightly larger cells in the test than in the control, we can focus on the most interesting ones (area or perimeter).

- Feret Control 2.95um; Test 3.05um p-value = 5.1e−10

This is the maximum distance between two points of the detected cell. It relates to the shape of the cell.

- Integrated Density Ch1 Control 11296 GV; Test 11886 GV p-value

This is the product of the area and the mean intensity. We know that the area is larger but the mean intensity is not. As a result, the integrated density of Ch1 is slightly larger (5%) in test vs control.

- Integrated Density Ch2 Control 5570 GV; Test 6548 GV p-value < 2.2e−16

Here we have an increase of about 18%, which reflects the combined increase in cell size and in the mean intensity of Ch2.

- Skew of Ch1 Control 2.47; Test 1.77 p-value < 2.2e−16

Skewness describes the asymmetry of the intensity distribution. Values above 0 mean that the distribution has a longer tail towards the higher intensities. Since this number is lower in the test cells, the distribution of Ch1 intensities is less asymmetric there. This is easily explained by looking at Ch1, which has a low intensity within the nuclei and strong foci: the high intensities of the foci produce a higher skewness. Since the skewness is closer to 0 in the test condition, either the foci are less intense or the Ch1 fluorescence is more evenly distributed. Since the mean intensity of Ch1 neither increases nor decreases, it is likely that the fluorescence is more evenly distributed in the test condition than in the control. In other words, in the control condition the foci seem to be brighter at the expense of the low fluorescence present in the nuclei, whereas the test cells cannot make very bright foci and their Ch1 fluorescence is more evenly distributed.

- Kurt of Ch1 Control 11.3; Test 7.24 p-value < 2.2e−16

Kurtosis accounts for the flatness of the distribution. If Kurt = 0 the distribution is as peaked as a normal distribution; if Kurt < 0 it is flatter than normal; if Kurt > 0 it is more peaked. In this case the data are very strongly peaked in both control and test. This is quite interesting, especially when looking at the violin graphs below. In the control we can see two peaks, one close to 0 and one close to 11. This means that there are two kinds of cells in the control condition: those with foci and those with only low-level, distributed Ch1 fluorescence. In the test condition the kurtosis values are centered and the two peaks are so close that they have almost merged.

- Raw Integrated density of Ch2 Control 313299 vs Test 368339; p-value < 2.2e−16

This is the sum of the pixel intensities. It is higher in the test cells than in the control cells. This can be because the intensity is higher and/or the area is larger. In this case we have seen previously that the area is larger. Interestingly, the raw integrated density is higher for Ch2 but the same for Ch1.

- Roundness Control 0.84; Test 0.83 p-value = 0.0032

As said before, even though the difference is statistically significant, it is minimal here, and we can safely move on to focus on another measurement.
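The size and intensity comparisons in the list above rest on a few lines of arithmetic. The sketch below reproduces them in Python from the reported mean values. It assumes that the cells keep the same shape and only change in scale, so that lengths scale with the square root of the area and the volume with the area to the power 1.5. The function and variable names are illustrative and are not part of the QC Scope scripts.

```python
import math


def relative_change(control: float, test: float) -> float:
    """Change from control to test, as a fraction of the control value."""
    return (test - control) / control


# Mean values copied from the list above.
area_control, area_test = 5.03, 5.35              # um^2
perimeter_control, perimeter_test = 8.45, 8.74    # um
mean_ch2_control, mean_ch2_test = 1078.0, 1197.0  # gray values (GV)
intden_ch2_control, intden_ch2_test = 5570.0, 6548.0

area_ratio = area_test / area_control

# Assumption: the cells keep the same shape and only change in scale, so any
# length (diameter, perimeter) scales with sqrt(area) and the volume with
# area ** 1.5.
length_ratio = math.sqrt(area_ratio)
volume_ratio = area_ratio ** 1.5

# Integrated density is area x mean intensity, so its expected ratio is the
# product of the two ratios. This is only approximate because the reported
# values are averages over many cells.
intden_ch2_expected_ratio = area_ratio * (mean_ch2_test / mean_ch2_control)

print(f"Area change:                {relative_change(area_control, area_test):+.1%}")
print(f"Expected length change:     {length_ratio - 1:+.1%}")
print(f"Measured perimeter change:  {relative_change(perimeter_control, perimeter_test):+.1%}")
print(f"Expected volume change:     {volume_ratio - 1:+.1%}")
print(f"Expected IntDen Ch2 change: {intden_ch2_expected_ratio - 1:+.1%}")
print(f"Measured IntDen Ch2 change: {relative_change(intden_ch2_control, intden_ch2_test):+.1%}")
```

With these values the expected length change is about 3.1%, against a measured perimeter change of about 3.4%, and the expected volume change is about 9.7%. The expected and measured integrated density changes for Ch2 agree to within about one percentage point.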

As we have seen, Ch1 and Ch2 label defined structures that differ between the control and test groups. Our cell-by-cell analysis does not provide enough detail on each structure. More specifically, it cannot discriminate between the low-intensity Ch1 signal and the high-intensity foci. It also looks at the overall Ch2 fluorescence in the cell, while we can clearly identify a bean-shaped structure. The next step would be to segment the images in three ways: high Ch1 intensities would correspond to the foci, high Ch2 intensities would correspond to the bean-shaped structure, and low Ch1 intensities (all Ch1 intensities, excluding the high intensities of the foci) would correspond to the overall nuclei.
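The three-way segmentation proposed above can be sketched as simple intensity thresholding on the pixels of one cell. This is only a minimal illustration in Python: the two thresholds and the synthetic pixel values are made up, and in practice the thresholds would have to be derived from the images and the segmentation carried out in FIJI.

```python
from statistics import mean
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]  # (Ch1, Ch2) intensities of one pixel, in gray values

# Hypothetical thresholds, chosen only for this illustration. Real values
# would have to be derived from the images themselves.
CH1_FOCI_THRESHOLD = 5000
CH2_STRUCTURE_THRESHOLD = 2000


def split_regions(cell_pixels: List[Pixel]) -> Dict[str, List[Pixel]]:
    """Assign the pixels of one segmented cell to the three regions."""
    return {
        # High Ch1 intensities: the foci.
        "foci": [p for p in cell_pixels if p[0] >= CH1_FOCI_THRESHOLD],
        # High Ch2 intensities: the bean-shaped structure.
        "bean": [p for p in cell_pixels if p[1] >= CH2_STRUCTURE_THRESHOLD],
        # All remaining Ch1 intensities: the overall nucleus without the foci.
        "nucleus": [p for p in cell_pixels if p[0] < CH1_FOCI_THRESHOLD],
    }


# Synthetic cell (made-up values): 40 dim pixels, 8 pixels inside the
# bean-shaped structure and 2 pixels inside a bright Ch1 focus.
cell = [(800, 300)] * 40 + [(900, 2500)] * 8 + [(9000, 350)] * 2

regions = split_regions(cell)
for name, pixels in regions.items():
    channel = 1 if name == "bean" else 0  # report Ch2 for the bean, Ch1 otherwise
    print(f"{name}: {len(pixels)} px, mean = {mean(p[channel] for p in pixels):.0f} GV")
```

Measuring area and mean intensity per region, rather than per cell, would help separate a change in the size of a structure from a change in its brightness.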

What are the parameters for choosing a good objective?

What is the Strehl ratio?

Screw type

RMS (Royal Microscopical Society objective thread)

M25 (metric 25-millimeter objective thread)

M32 (metric 32-millimeter objective thread).

Less common or older

M27 x 0.75

M27 x 1.0

Working distance

Immersion

Numerical aperture

Optical Correction

Transmittance

Others

Tube length

Adjustment

I often get questions about how to write the Materials and Methods section of a manuscript that includes microscopy data. The diversity of technologies and applications in the field of microscopy can be complicated for some users, and the help of a microscopy specialist is often required.

Here I would like to present a useful tool called MicCheck, which provides a checklist of what to include in the Materials and Methods section of a manuscript that includes microscopy data. MicCheck is free and is described in detail in the following article.

Montero Llopis, P., Senft, R.A., Ross-Elliott, T.J. et al. Best practices and tools for reporting reproducible fluorescence microscopy methods. Nat Methods 18, 1463–1476 (2021). https://doi.org/10.1038/s41592-021-01156-w

Issue Date: December 2021