Created by Nicolas Stifani, last updated on Dec 17, 2024


Illumination Warmup Kinetic

When a light source is switched on, it takes time to reach a stable steady state. This duration is known as the warmup period. Record a warmup kinetic at least once a year to define this period accurately.

Acquisition protocol

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source and immediately record the power output over time (every 10 seconds for 1 hour is a good start but can be adjusted) until it stabilizes

I personally record every 10 seconds for up to 24 hours, but stop once the output has been stable for 1 h.

- Repeat steps 3 to 5 for each light source you wish to monitor

Keep the light source ON at all times during the recording. Depending on the hardware, the light sources either stay ON permanently or are shut down automatically by the software when not in use, so check that an automatic shutdown does not interrupt the recording.

Results

Fill in the orange cells in the following spreadsheet template Illumination Warmup Kinetic_Template.xlsx to visualize your results.

For each light source plot the measured power output (mW) over time.

Calculate the relative power: Relative Power = Power/MaxPower and plot the Relative Power (%) over time.

We observe some variability in the power output for the 385nm light source.

To assess stability, define a Stability Duration Window (e.g., 10 minutes), which represents the time period over which the power output should remain stable. Additionally, specify a maximum Coefficient of Variation (CV) threshold that indicates acceptable variability within the chosen window (e.g., 0.01%).

The Coefficient of Variation (CV) can be calculated using the formula: CV = StdDev / Mean for the given duration. Calculate the CV for the specified duration window and plot it over time to visualize the stability of the power output.
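The sliding-window CV and the resulting stabilisation time can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the template's formula: readings are equally spaced, the CV uses the sample standard deviation and is expressed as a fraction (a 0.01% threshold is `1e-4`), and the stabilisation time is taken as the start of the first window that meets the threshold. The names `rolling_cv` and `stabilisation_time` are hypothetical.

```python
import statistics


def rolling_cv(powers, window_points):
    """CV (StdDev / Mean, as a fraction) of every run of `window_points`
    consecutive readings; element i covers powers[i:i + window_points]."""
    cvs = []
    for i in range(len(powers) - window_points + 1):
        chunk = powers[i:i + window_points]
        # Sample standard deviation, as with a spreadsheet STDEV function
        cvs.append(statistics.stdev(chunk) / statistics.mean(chunk))
    return cvs


def stabilisation_time(times, powers, window_points, max_cv):
    """Start time of the first window whose CV is <= max_cv, or None."""
    for i, cv in enumerate(rolling_cv(powers, window_points)):
        if cv <= max_cv:
            return times[i]
    return None


# Illustrative readings (not measurements): a source that warms up, then settles
times_s = [0, 10, 20, 30, 40, 50, 60, 70]
powers_mw = [90.0, 95.0, 98.0, 99.5, 100.0, 100.0, 100.0, 100.0]
print(stabilisation_time(times_s, powers_mw, window_points=3, max_cv=1e-4))  # 40
```

With readings every 10 seconds, a 10-minute window corresponds to roughly `window_points = 60`.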

We observe that the 475nm, 555nm and 630nm light sources stabilize quickly (in less than 10 minutes), while the 385nm light source takes approximately 41 minutes to reach stability.

Report the results in a table

Stability Duration Window (min): 10 min; Maximum Coefficient of Variation: 0.01%

| | 385nm | 475nm | 555nm | 630nm |
|---|---|---|---|---|
| Stabilisation time (min) (Max CV 0.01% for 10 min) | 41 | 3 | 3 | 8 |
| Stability Factor (%) Before Warmup | 99.7% | 99.9% | 100.0% | 100.0% |
| Stability Factor (%) After Warmup | 100.0% | 100.0% | 100.0% | 99.9% |

Conclusion

The illumination warm-up time for this instrument is approximately 40 minutes, set by the 385nm light source. This warm-up is required for quantitative measurements, and its length reflects the strict stability criterion used here: a maximum Coefficient of Variation (CV) of 0.01% within a 10-minute window.

Maximum Power Output

This measure evaluates the maximum power output of each light source, which depends both on the quality of the light source and on the components along the light path. Over time, we anticipate a gradual decrease in power output, reflecting the aging of the hardware, including the light source and other optical components.

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the average power output over 10 seconds

I personally re-use the data collected at the end of the warmup kinetic experiment.

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template Maximum Illumination Power Output_Template.xlsx to visualize your results. For each light source plot the measured maximal power output (mW).

Compare the measured maximal power output (mW) with the manufacturer specifications and calculate the relative power: Relative Power = Measured Power / Specifications.

Report the results in a table

| | Manufacturer Specifications (mW) | Measurements 2024-11-22 (mW) | Relative Power (%) |
|---|---|---|---|
| 385nm | 150.25 | 122.2 | 81% |
| 470nm | 110.4 | 95.9 | 87% |
| 555nm | 31.9 | 24 | 75% |
| 630nm | 52 | 39.26 | 76% |
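The relative power, and its average over the four light sources, can be checked with a few lines of Python. This sketch only re-uses the values of the table above; `relative_power` is a hypothetical helper name, not part of the spreadsheet template.

```python
# Values (mW) copied from the table above
specs_mw = {"385nm": 150.25, "470nm": 110.4, "555nm": 31.9, "630nm": 52.0}
measured_mw = {"385nm": 122.2, "470nm": 95.9, "555nm": 24.0, "630nm": 39.26}


def relative_power(measured, spec):
    """Relative Power = Measured Power / Specifications, in percent."""
    return 100.0 * measured / spec


relative = {src: relative_power(measured_mw[src], specs_mw[src]) for src in specs_mw}
average = sum(relative.values()) / len(relative)
for src, value in relative.items():
    print(f"{src}: {value:.1f}%")
print(f"Average: {average:.1f}%")  # about 79.7%
```

The average of about 80% is the figure used in the conclusion below.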

Conclusion

This instrument provides about 80% (75% to 87%) of the power stated in the manufacturer specifications. These results are consistent with expectations, because the manufacturer specifications were obtained with a different objective and likely different filters and mirrors.

Illumination stability

The light sources used on a microscope should be constant, or at least stable over the time scale of an experiment. For this reason, power stability is recorded over 4 different time scales:

- Real-time illumination stability: Continuous recording for 1 min. This represents the duration of a z-stack acquisition.

- Short-term illumination stability: Every 1-10 seconds for 5-15 min. This represents the duration to acquire several images.

- Mid-term illumination stability: Every 10-30 seconds for 1-2 hours. This represents the duration of a typical acquisition session or short time-lapse experiments. For longer time-lapse experiments, longer durations may be used.

- Long-term illumination stability: At least once a year over the lifetime of the instrument.

Real-time illumination stability

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the power output as fast as possible for 1 minute

I personally acquire this data right after the warmup kinetic experiment (and without turning off the light source).

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template Illumination Stability_Template.xlsx to visualize your results.

For each light source plot the measured power output (mW) over time.

Calculate the relative power: Relative Power = Power/MaxPower and plot the Relative Power (%) over time.

Calculate the Stability Factor S (%) = 100 x (1 - (Pmax - Pmin)/(Pmax + Pmin)) and the Coefficient of Variation CV = StdDev/Mean.
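Both stability metrics can be sketched in Python as below, as a cross-check of the spreadsheet. The function names are hypothetical, and the sketch assumes the sample standard deviation; a spreadsheet may use either the sample or the population version, which gives slightly different CVs for short recordings.

```python
import statistics


def stability_factor(powers):
    """S (%) = 100 x (1 - (Pmax - Pmin) / (Pmax + Pmin))."""
    p_max, p_min = max(powers), min(powers)
    return 100.0 * (1 - (p_max - p_min) / (p_max + p_min))


def coefficient_of_variation(powers):
    """CV (%) = 100 x StdDev / Mean, using the sample standard deviation."""
    return 100.0 * statistics.stdev(powers) / statistics.mean(powers)


# Illustrative readings in mW (not measurements)
readings = [99.0, 101.0, 100.0]
print(round(stability_factor(readings), 2))  # 99.0
print(round(coefficient_of_variation(readings), 2))  # 1.0
```

The same two functions apply unchanged to the short-term, mid-term and long-term recordings below.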

Report the results in a table.

| | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 99.99% | 0.002% |
| 475nm | 99.99% | 0.002% |
| 555nm | 99.97% | 0.004% |
| 630nm | 99.99% | 0.002% |

Conclusion

The light sources are highly stable (>99.9%) during a 1 min period.

Short-term illumination stability

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the power output every 10 seconds for 15 minutes

I personally re-use the data collected during the warmup kinetic experiment.

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template Illumination Stability_Template.xlsx to visualize your results.

For each light source plot the measured power output (mW) over time.

Calculate the relative power: Relative Power = Power/MaxPower and plot the Relative Power (%) over time.

Calculate the Stability Factor S (%) = 100 x (1 - (Pmax - Pmin)/(Pmax + Pmin)) and the Coefficient of Variation CV = StdDev/Mean.

Report the results in a table.

| | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 100.00% | 0.000% |
| 475nm | 100.00% | 0.002% |
| 555nm | 100.00% | 0.003% |
| 630nm | 99.99% | 0.004% |

Conclusion

The light sources are highly stable (>99.9%) during a 15 min period.

Mid-term illumination stability

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the power output every 10 seconds for 1 hour

I personally re-use the data collected during the warmup kinetic experiment.

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template Illumination Stability_Template.xlsx to visualize your results.

For each light source plot the measured power output (mW) over time.

Calculate the relative power: Relative Power = Power/MaxPower and plot the Relative Power (%) over time.

Calculate the Stability Factor S (%) = 100 x (1 - (Pmax - Pmin)/(Pmax + Pmin)) and the Coefficient of Variation CV = StdDev/Mean.

Report the results in a table.

| | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 99.98% | 0.013% |
| 475nm | 99.98% | 0.011% |
| 555nm | 99.99% | 0.007% |
| 630nm | 99.97% | 0.020% |

Conclusion

The light sources are highly stable (>99.9%) during a 1 h period.

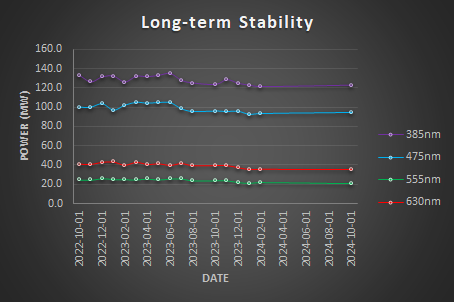

Long-term illumination stability

Long-term illumination stability measures the power output over the lifetime of the instrument.

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the maximal power output

I personally re-use the data collected over time for the Maximum Power Output measurement.

- Repeat steps 5 to 7 for each light source/wavelength

- Repeat every month

Results

Fill in the orange cells in the following spreadsheet template Illumination Stability_Template.xlsx to visualize your results.

For each light source plot the measured power output (mW) over time.

Calculate the relative power: Relative Power = Power/MaxPower and plot the Relative Power (%) over time.

Calculate the Stability Factor S (%) = 100 x (1 - (Pmax - Pmin)/(Pmax + Pmin)) and the Coefficient of Variation CV = StdDev/Mean.

Report the results in a table.

| | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 94.51% | 3.49% |
| 475nm | 93.59% | 4.42% |
| 555nm | 88.96% | 6.86% |
| 630nm | 89.46% | 6.71% |

Conclusion

The light sources are reasonably stable over the last 2 years (stability factor between 89% and 95%), but the maximum power output decreases over time.

Illumination stability conclusions

| Stability Factor | Real-time (1 min) | Short-term (15 min) | Mid-term (1 h) |
|---|---|---|---|
| 385nm | 99.99% | 100.00% | 99.98% |
| 475nm | 99.99% | 100.00% | 99.98% |
| 555nm | 99.97% | 100.00% | 99.99% |
| 630nm | 99.99% | 99.99% | 99.97% |

The light sources are highly stable (stability factor >99.9%) over time scales from 1 min to 1 h.

Illumination Input-Output Linearity

This measure compares the power output when the input varies. We expect a linear relationship between the input and the power output.

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Set the light source to 0%, 10%, 20%, 30%, …, 100%

I personally collect this data after the warm-up kinetic, and right after collecting the real-time power stability.

- Record the power output for each input

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template Illumination Power Linearity_Template.xlsx to visualize your results.

For each light source, plot the measured power output (mW) as a function of the input (%).

Calculate the relative power: Relative Power = Power/MaxPower, and plot the Relative Power (%) as a function of the input (%).

Determine the equation for each curve, typically a linear relationship of the form Output = K × Input. Report the slope (K) and the coefficient of determination (R²) in a table.

Illumination Input-Output Linearity

| | Slope (K) | R² |
|---|---|---|
| 385nm | 0.9969 | 1 |
| 475nm | 0.9984 | 1 |
| 555nm | 1.0012 | 1 |
| 630nm | 1.0034 | 1 |
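The slope K and R² of Output = K × Input can be reproduced with a least-squares fit through the origin, sketched below in Python. This assumes the intercept is forced to zero, as the equation form suggests; `fit_through_origin` is a hypothetical name. R² here uses the usual definition centred on the mean output. Spreadsheet tools may compute R² differently when the intercept is forced to zero, so small differences from the table are possible.

```python
def fit_through_origin(inputs, outputs):
    """Least-squares fit of Output = K x Input with the intercept forced to 0.

    Returns (K, R2), where R2 = 1 - SS_res / SS_tot and SS_tot is taken
    around the mean output (the usual, centred definition).
    """
    k = sum(x * y for x, y in zip(inputs, outputs)) / sum(x * x for x in inputs)
    mean_out = sum(outputs) / len(outputs)
    ss_res = sum((y - k * x) ** 2 for x, y in zip(inputs, outputs))
    ss_tot = sum((y - mean_out) ** 2 for y in outputs)
    return k, 1 - ss_res / ss_tot


# Illustrative data (not measurements): input 0-100 %, relative power in %
inputs_pct = list(range(0, 101, 10))
outputs_pct = [0.997 * x for x in inputs_pct]
slope, r2 = fit_through_origin(inputs_pct, outputs_pct)
print(f"K = {slope:.4f}, R2 = {r2:.4f}")  # K = 0.9970, R2 = 1.0000
```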

Conclusion

The light sources are highly linear: the slopes are very close to 1 and R² equals 1.

Objectives and Cubes transmittance

Since we are using a power meter, we can also easily assess the transmittance of the objectives and the filter cubes. This measure compares the power output when different objectives and cubes are in the light path. It evaluates the transmittance of each objective, compares it with the manufacturer specifications, and can reveal defects or dirt on the objectives.

Objectives transmittance

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the power output with each objective, as well as without any objective

I personally collect this data after the warm-up kinetic and the real-time power stability, right after collecting the power output linearity.

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template to visualize your results.

For each objective, plot the measured power output (mW) as a function of the wavelength (nm).

Calculate the relative transmittance: Relative Transmittance = Power/PowerNoObjective, and plot the Relative Transmittance (%) as a function of the wavelength (nm).

Calculate the average transmittance for each objective and report it in a table.
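The relative and average transmittance can be sketched in Python as below, assuming the powers are stored per wavelength and that the average is a plain mean over the measured wavelengths. The helper names and the values are illustrative only.

```python
def relative_transmittance(power_with, power_without):
    """Power / PowerNoObjective for each wavelength measured with the objective."""
    return {wl: power_with[wl] / power_without[wl] for wl in power_with}


def average_transmittance(transmittance):
    """Mean relative transmittance over the measured wavelengths."""
    return sum(transmittance.values()) / len(transmittance)


# Illustrative values in mW keyed by wavelength in nm (not measurements)
no_objective = {385: 100.0, 475: 80.0}
objective_x = {385: 50.0, 475: 60.0}
t = relative_transmittance(objective_x, no_objective)
print(t, average_transmittance(t))  # {385: 0.5, 475: 0.75} 0.625
```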

Compare the average transmittance to the specification provided by the manufacturer.

Here we see that the measurements are close to the specifications, with the exception of the 63x-1.4 objective. This is expected because the 63x objective has a smaller back aperture, which reduces the amount of light reaching the sensor. You can also compare the complete transmittance curves.

Conclusion

The objectives are transmitting light properly.

Cubes transmittance

Acquisition protocol

- Warm up the light sources (see the Illumination Warmup Kinetic section for the required duration)

- Place a power meter sensor (e.g., Thorlabs S170C) on the stage

- Center the sensor and the objective

- Zero the sensor to ensure accurate readings

- Select the wavelength of the light source you wish to monitor using your power meter controller (e.g., Thorlabs PM400) or software

- Turn on the light source to 100%

- Record the power output for each filter cube

I personally collect this data after the warm-up kinetic and the real-time power stability, right after collecting the power output linearity.

- Repeat steps 5 to 7 for each light source/wavelength

Results

Fill in the orange cells in the following spreadsheet template to visualize your results.

For each filter cube, plot the measured power output (mW) as a function of the wavelength (nm).

Calculate the relative transmittance: Relative Transmittance = Power/PowerofMaxFilter, where PowerofMaxFilter is the power measured with the cube that transmits the most light at that wavelength, and plot the Relative Transmittance (%) as a function of the wavelength (nm).

Calculate the average transmittance for each filter at the appropriate wavelengths and report it in a table.
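Normalising each cube to the best-transmitting cube at each wavelength can be sketched in Python as follows. The sketch assumes powers are stored per cube and per wavelength, and that PowerofMaxFilter is the highest power measured at that wavelength across all cubes. Cube names, values and helper names are illustrative.

```python
def relative_to_best_cube(powers):
    """powers: {cube: {wavelength_nm: power_mW}}.

    Returns {cube: {wavelength_nm: Power / PowerofMaxFilter}}, where
    PowerofMaxFilter is the highest power measured at that wavelength
    across all cubes (0.0 is returned if no cube transmits any light).
    """
    best = {}
    for cube in powers.values():
        for wl, p in cube.items():
            best[wl] = max(best.get(wl, 0.0), p)
    return {
        name: {wl: (p / best[wl] if best[wl] > 0 else 0.0) for wl, p in cube.items()}
        for name, cube in powers.items()
    }


def average_over(transmittance, wavelengths):
    """Average transmittance of one cube over its relevant wavelengths."""
    values = [transmittance[wl] for wl in wavelengths if wl in transmittance]
    return sum(values) / len(values) if values else None


# Illustrative values in mW (not measurements)
cubes = {"Quad": {385: 10.0, 475: 8.0}, "DAPI": {385: 5.0}, "GFP": {475: 2.0}}
rel = relative_to_best_cube(cubes)
print(rel["DAPI"][385], rel["GFP"][475])  # 0.5 0.25
```

Passing only the wavelengths each cube is designed for to `average_over` gives the "appropriate wavelengths" average.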

Compare the average transmittance to the specification provided by the manufacturer.

- The DAPI cube only transmits 14% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5. It is usable but will provide a low signal. This is likely because the excitation filter within the cube does not properly match the light source. This filter could be removed, since an excitation filter is already included within the light source.

- The GFP and DsRed cubes transmit 47% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5. They work properly.

- The DHE cube does not transmit any light from the Colibri. This cube could be removed and stored.

- The Cy5 cube transmits 84% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5. It works properly.

Conclusion

Action is required for the DAPI and DHE cubes.

XYZ Drift

This experiment assesses the stability of the system in the XY and Z directions. As previously noted, when an instrument is started, it requires time to reach a stable steady state, a phase known as the warmup period. To accurately determine this duration, it is essential to record a warmup kinetic at least once a year.

Acquisition protocol

- Place 4 µm diameter fluorescent beads (TetraSpec Fluorescent Microspheres Size Kit, mounted on a slide) on the stage

- Center the sample under a high-NA dry objective

- Select an imaging channel (e.g., Cy5)

- Acquire a large Z-stack every minute for 24 hours

It is crucial to account for potential drift in the Z-axis by acquiring a Z-stack that is significantly larger than the visible bead size (e.g., 40 µm)

Results

- Use the TrackMate plugin for FIJI to detect and track spots over time

- Apply Difference of Gaussians (DoG) spot detection with a detection size of 4 µm

- Set a quality threshold greater than 20 and enable sub-pixel localization for increased accuracy

- Export the detected spot coordinates as a CSV file for further analysis

Fill in the orange cells in the spreadsheet template XYZ Drift Kinetic_Template.xlsx to visualize your results. Copy and paste the X, Y, Z, T and Frame columns from the TrackMate spots CSV file into the orange columns of the XLSX file, then fill in the NA and emission wavelength used.

Calculate the relative displacement in X, Y and Z: Relative Displacement = Position - PositionInitial and plot the relative displacement over time.

We observe an initial drift that stabilizes over time in X (+2 µm), Y (+1.3 µm) and Z (-10.5 µm).

Calculate the 3D displacement, $\text{3D Displacement} = \sqrt{(X_2 - X_1)^2 + (Y_2 - Y_1)^2 + (Z_2 - Z_1)^2}$, and plot it over time. Calculate the lateral resolution of your imaging configuration, $\text{Lateral Resolution} = \lambda_{\text{Emission}} / (2 \cdot \text{NA})$, and plot it over time (it is constant).

Visually identify the time when the displacement falls below the resolution of the system. On this instrument, it takes about 120 min to reach stability.
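The displacement-versus-resolution check can also be done numerically, as sketched below in Python. This is one literal reading of the formula, with X1 and X2 taken as consecutive time points, and it assumes stability means that every later step stays below the lateral resolution. Positions are in nm (the R script below converts TrackMate positions from µm by multiplying by 1000). The helper names are hypothetical.

```python
import math


def step_displacements(xs, ys, zs):
    """3D Displacement between consecutive time points; element i is the
    distance travelled from frame i to frame i + 1 (same unit as the input)."""
    return [
        math.sqrt((xs[i] - xs[i - 1]) ** 2 + (ys[i] - ys[i - 1]) ** 2 + (zs[i] - zs[i - 1]) ** 2)
        for i in range(1, len(xs))
    ]


def lateral_resolution_nm(emission_nm, na):
    """Lateral Resolution = LambdaEmission / (2 x NA)."""
    return emission_nm / (2 * na)


def first_stable_frame(displacements, resolution):
    """First frame from which every later step stays below the resolution,
    or None if the last step is still above it."""
    idx = len(displacements)
    while idx > 0 and displacements[idx - 1] < resolution:
        idx -= 1
    return idx if idx < len(displacements) else None


# Illustrative positions in nm (not measurements)
xs, ys, zs = [0, 3000, 3600, 3700], [0, 4000, 4000, 4000], [0, 0, 0, 0]
steps = step_displacements(xs, ys, zs)  # [5000.0, 600.0, 100.0]
res = lateral_resolution_nm(705, 0.5)  # 705.0, as in the table below
print(first_stable_frame(steps, res))  # 1
```

With one Z-stack per minute, the returned frame index is also the stabilisation time in minutes.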

Calculate the velocity, $\text{Velocity} = (\text{Displacement}_2 - \text{Displacement}_1) / (T_2 - T_1)$, and plot it over time.

Calculate the average velocity before and after stabilisation and report the results in a table

| Parameter | Value |
|---|---|
| Objective NA | 0.5 |
| Emission Wavelength (nm) | 705 |
| Lateral Resolution (nm) | 705 |
| Stabilisation time (min) | 122 |
| Average velocity Warmup (nm/min) | 113 |
| Average velocity System Ready (nm/min) | 14 |

Conclusion

The warmup time for this specific instrument is about 2 hours. The average displacement velocity after warmup is 14 nm/min, which is acceptable.

XYZ Repositioning accuracy

This experiment evaluates the XY accuracy of the system by measuring its repositioning accuracy. Several variables can affect repositioning accuracy: i) time, ii) traveled distance, iii) speed and iv) acceleration.

Acquisition protocol

- Place 4 µm diameter fluorescent beads (TetraSpec Fluorescent Microspheres Size Kit, mounted on a slide) on the stage

- Center the sample under a high-NA dry objective

- Select an imaging channel (e.g., Cy5)

- Acquire a Z-stack at 2 different positions separated by 0, 1, 10, 100, 1,000, 10,000 and 80,000 µm in the X and Y directions

- Repeat the acquisition 20 times

Be careful: your stage might have a smaller travel range!

Be careful not to damage the objectives (lower the objectives during movement).

I recommend acquiring 3 datasets for each condition.

Results

- Use the TrackMate plugin for FIJI to detect and track spots over time

- Apply Difference of Gaussians (DoG) spot detection with a detection size of 1 µm

- Set a quality threshold greater than 20 and enable sub-pixel localization for increased accuracy

- Export the detected spot coordinates as a CSV file for further analysis

Fill in the orange cells in the spreadsheet template XY Repositioning Accuracy_Template.xlsx to visualize your results. Copy and paste the X, Y, Z, T and Frame columns from the TrackMate spots CSV file into the orange columns of the XLSX file, then fill in the NA and emission wavelength used.

This experiment shows the displacement in X, Y and Z after +30 mm and -30 mm movements in X and Y, repeated 20 times.

Report the results in a table.

| Parameter | Value |
|---|---|
| Objective NA | 0.5 |
| Emission Wavelength (nm) | 705 |
| Lateral Resolution (nm) | 705 |
| X Accuracy (nm) | 195 |
| Y Accuracy (nm) | 175 |
| Z Accuracy (nm) | 52 |
| Repositioning Accuracy 3D (nm) | 169 |
| Repositioning Accuracy 2D (nm) | 178 |

Because several variables can affect repositioning accuracy (i) time, ii) traveled distance, iii) speed and iv) acceleration), we decided to test each of them. To do this, we use the following Jython script to automatically process the open image (or all open images) in ImageJ/FIJI with the TrackMate plugin. It saves the detected spots of each image as a CSV file in an Output folder on your Desktop.

```python
# --------------------------
# User Prompt Toggle
# --------------------------
# Set this to False to skip the dialog and reuse the previously saved settings
show_initial_prompt = True  # Change this as needed

import os
import sys
import json

from ij import IJ, WindowManager
from java.io import File
from ij.gui import GenericDialog
from fiji.plugin.trackmate import Model, Settings, TrackMate, SelectionModel, Logger
from fiji.plugin.trackmate.detection import DogDetectorFactory
from fiji.plugin.trackmate.tracking.jaqaman import SparseLAPTrackerFactory
from fiji.plugin.trackmate.features import FeatureFilter
from fiji.plugin.trackmate.features.track import TrackIndexAnalyzer
from fiji.plugin.trackmate.gui.displaysettings import DisplaySettingsIO
from fiji.plugin.trackmate.gui.displaysettings.DisplaySettings import TrackMateObject
from fiji.plugin.trackmate.visualization.table import TrackTableView
from fiji.plugin.trackmate.visualization.hyperstack import HyperStackDisplayer

# Ensure UTF-8 encoding (Jython 2.7)
reload(sys)
sys.setdefaultencoding('utf-8')

# Path to the config file that stores the last user settings
config_file_path = os.path.join(os.path.expanduser("~"), "Desktop", "trackmate_config.json")

# Default settings used when there is no previous config file
default_settings = {
    'subpixel_localization': True,
    'spot_diameter': 4.0,  # Default 4.0 microns (bead diameter)
    'threshold_value': 20.904,  # Default DoG quality threshold
    'apply_median_filtering': False,
    'process_all_images': False,
}


def load_settings():
    """Return the saved settings, completed with defaults for any missing key."""
    loaded = dict(default_settings)
    if os.path.exists(config_file_path):
        with open(config_file_path, 'r') as f:
            loaded.update(json.load(f))
    return loaded


def save_settings(settings_to_save):
    """Write the settings to the config file."""
    with open(config_file_path, 'w') as f:
        json.dump(settings_to_save, f)


# Load previous settings (or the defaults if no file exists)
user_settings = load_settings()

# Create the output directory on the user's Desktop
output_dir = os.path.join(os.path.expanduser("~"), "Desktop", "Output")
if not os.path.exists(output_dir):
    os.makedirs(output_dir)

# ------------------------
# Input dialog
# ------------------------
if show_initial_prompt:
    gd = GenericDialog("TrackMate Parameters")
    # Prefill the fields with the last saved values
    gd.addCheckbox("Enable Subpixel Localization?", user_settings['subpixel_localization'])
    gd.addNumericField("Spot Diameter (microns):", user_settings['spot_diameter'], 2)
    gd.addSlider("Threshold Value:", 0, 255, user_settings['threshold_value'])  # 0-255 range
    gd.addCheckbox("Apply Median Filtering?", user_settings['apply_median_filtering'])
    gd.addCheckbox("Process All Open Images?", user_settings['process_all_images'])
    gd.showDialog()
    if gd.wasCanceled():
        sys.exit("User canceled the operation.")
    # Checkboxes and numeric fields are read back in the order they were added
    user_settings['subpixel_localization'] = gd.getNextBoolean()
    user_settings['spot_diameter'] = gd.getNextNumber()
    user_settings['threshold_value'] = gd.getNextNumber()
    user_settings['apply_median_filtering'] = gd.getNextBoolean()
    user_settings['process_all_images'] = gd.getNextBoolean()
    save_settings(user_settings)

subpixel_localization = user_settings['subpixel_localization']
spot_diameter = user_settings['spot_diameter']
threshold_value = user_settings['threshold_value']
apply_median_filtering = user_settings['apply_median_filtering']
process_all_images = user_settings['process_all_images']


def process_image(imp):
    """Detect and track spots in imp with TrackMate, display them and export
    the spot table as <title>_spots.csv. Returns True on success."""
    filename = imp.getTitle()

    # Use a fresh model for each image so results never mix between images
    model = Model()
    model.setLogger(Logger.IJ_LOGGER)  # Send messages to the ImageJ log window

    # Configure the DoG detector
    settings = Settings(imp)
    settings.detectorFactory = DogDetectorFactory()
    settings.detectorSettings = {
        'DO_SUBPIXEL_LOCALIZATION': subpixel_localization,
        'RADIUS': spot_diameter / 2.0,  # The detector expects a radius
        'TARGET_CHANNEL': 1,  # Target channel (customize if needed)
        'THRESHOLD': threshold_value,
        'DO_MEDIAN_FILTERING': apply_median_filtering,
    }

    # Configure the LAP tracker
    settings.trackerFactory = SparseLAPTrackerFactory()
    settings.trackerSettings = settings.trackerFactory.getDefaultSettings()
    settings.trackerSettings['ALLOW_TRACK_SPLITTING'] = True
    settings.trackerSettings['ALLOW_TRACK_MERGING'] = True

    # Add all known feature analyzers to compute track statistics
    settings.addAllAnalyzers()

    # Keep only tracks whose total displacement is below 10 (calibrated units, e.g. microns)
    settings.addTrackFilter(FeatureFilter('TRACK_DISPLACEMENT', 10, False))

    trackmate = TrackMate(model, settings)
    if not trackmate.checkInput():
        IJ.log("Error checking input for " + filename + ": " + trackmate.getErrorMessage())
        return False
    if not trackmate.process():
        IJ.log("Error during processing of " + filename + ": " + trackmate.getErrorMessage())
        return False

    # Display tracks and spots on the image, colored by track index
    selection_model = SelectionModel(model)
    ds = DisplaySettingsIO.readUserDefault()
    ds.setTrackColorBy(TrackMateObject.TRACKS, TrackIndexAnalyzer.TRACK_INDEX)
    ds.setSpotColorBy(TrackMateObject.TRACKS, TrackIndexAnalyzer.TRACK_INDEX)
    displayer = HyperStackDisplayer(model, selection_model, imp, ds)
    displayer.render()
    displayer.refresh()

    # Export the spot table as CSV
    spot_table = TrackTableView.createSpotTable(model, ds)
    output_csv_path = os.path.join(output_dir, filename + "_spots.csv")
    spot_table.exportToCsv(File(output_csv_path))
    return True


if process_all_images:
    open_images = WindowManager.getImageTitles()
    total_images = len(open_images)
    if total_images == 0:
        IJ.log("No images are open!")
        sys.exit("No images are open!")
    for idx, title in enumerate(open_images):
        imp = WindowManager.getImage(title)
        if imp is None:  # Skip windows that no longer exist
            continue
        if process_image(imp):
            imp.close()  # Close the image after successful processing
        IJ.showProgress(idx + 1, total_images)
else:
    # Process only the active image and leave it open
    imp = WindowManager.getCurrentImage()
    if imp is None:
        sys.exit("No image is currently open.")
    process_image(imp)

# Show completion message
IJ.log("Processing Complete!")
```

This creates many CSV files that need to be aggregated for the following analysis. The following R script processes all CSV files placed in the Output folder on your Desktop (it locates the Desktop through the Windows USERPROFILE environment variable).

# Clear the workspace, then install (if needed) and load the required libraries
rm(list = ls())

# Check and install required packages if they are not already installed
if (!require(dplyr)) install.packages("dplyr", dependencies = TRUE)
if (!require(stringr)) install.packages("stringr", dependencies = TRUE)
if (!require(ggplot2)) install.packages("ggplot2", dependencies = TRUE)
if (!require(corrplot)) install.packages("corrplot", dependencies = TRUE)

# Load libraries
library(dplyr)
library(stringr)
library(ggplot2)
library(corrplot)

# Set default input and output directories
# (USERPROFILE is defined on Windows; on macOS/Linux use Sys.getenv("HOME") instead)
default_input_dir <- file.path(Sys.getenv("USERPROFILE"), "Desktop", "Output") # Default to "Output" on Desktop
InputFolder <- default_input_dir # Use default folder

# Specify the Output folder (this is a fixed folder on the Desktop)
OutputFolder <- file.path(Sys.getenv("USERPROFILE"), "Desktop", "Output")
if (!dir.exists(OutputFolder)) dir.create(OutputFolder, recursive = TRUE)
output_file <- "merged_data.csv"

# List all CSV files in the folder, skipping the merged file left by a previous run
csv_files <- list.files(path = InputFolder, pattern = "\\.csv$", full.names = TRUE)
csv_files <- csv_files[basename(csv_files) != output_file]
if (length(csv_files) == 0) stop("No CSV files found in the selected directory.")

# Column names are taken from the first header row of the first file
header <- names(read.csv(csv_files[1], nrows = 1))

# Function to clean the filename
clean_filename <- function(filename) {
  filename_parts <- strsplit(filename, " - ")[[1]]
  cleaned_filename <- sub("\\.czi", "", filename_parts[1])
  cleaned_filename <- sub("_spots", "", cleaned_filename)
  cleaned_filename <- sub("\\.csv", "", cleaned_filename)
  return(cleaned_filename)
}

# Read and merge all CSV files
merged_data <- csv_files %>%
  lapply(function(file) {
    # The first 4 rows of each spot table are headers; read the data rows only
    data <- read.csv(file, skip = 4, header = FALSE)
    colnames(data) <- header
    data <- data %>% arrange(FRAME)

    # Clean and add source file info
    filename <- basename(file)
    data$SourceFile <- clean_filename(filename)

    # Extract variables from the filename (parts separated by "_")
    filename_parts <- strsplit(clean_filename(filename), "_")[[1]]
    for (i in seq_along(filename_parts)) {
      variable_name <- paste("Variable-", sprintf("%03d", i), sep = "")
      data[[variable_name]] <- filename_parts[i]
    }

    # Add time columns if available
    if ("POSITION_T" %in% colnames(data)) {
      data$`Time (sec)` <- round(data$POSITION_T, 0)
      data$`Time (min)` <- round(data$`Time (sec)` / 60, 2)
    }

    # Position relative to frame 0 for each axis, converted from µm to nm
    if ("POSITION_X" %in% colnames(data)) {
      first_value <- data$POSITION_X[data$FRAME == 0][[1]]
      data$`X (nm)` <- (data$POSITION_X - first_value) * 1000
    }
    if ("POSITION_Y" %in% colnames(data)) {
      first_value <- data$POSITION_Y[data$FRAME == 0][[1]]
      data$`Y (nm)` <- (data$POSITION_Y - first_value) * 1000
    }
    if ("POSITION_Z" %in% colnames(data)) {
      first_value <- data$POSITION_Z[data$FRAME == 0][[1]]
      data$`Z (nm)` <- (data$POSITION_Z - first_value) * 1000
    }

    # Frame-to-frame displacement (3D and 2D); the first frame is set to 0
    if (all(c("X (nm)", "Y (nm)", "Z (nm)") %in% colnames(data))) {
      data$`Displacement 3D (nm)` <- sqrt(
        diff(c(0, data$`X (nm)`))^2 +
        diff(c(0, data$`Y (nm)`))^2 +
        diff(c(0, data$`Z (nm)`))^2
      )
      data$`Displacement 3D (nm)`[1] <- 0
    }
    if (all(c("X (nm)", "Y (nm)") %in% colnames(data))) {
      data$`Displacement 2D (nm)` <- sqrt(
        diff(c(0, data$`X (nm)`))^2 +
        diff(c(0, data$`Y (nm)`))^2
      )
      data$`Displacement 2D (nm)`[1] <- 0
    }

    return(data)
  }) %>%
  bind_rows()

# Save the merged data to a new CSV file
write.csv(merged_data, file = file.path(OutputFolder, output_file), row.names = FALSE)
cat("All CSV files have been merged and saved to", file.path(OutputFolder, output_file), "\n")

This script calculates, for each axis and each file, the position relative to the first frame:

$$\text{Position}_{\text{relative}} = \text{Position} - \text{Position}_{\text{initial}}$$

It also calculates the 2D and 3D displacements, where 1 and 2 denote two consecutive frames:

$$\text{Displacement}_{2D} = \sqrt{(X_2 - X_1)^2 + (Y_2 - Y_1)^2}$$

$$\text{Displacement}_{3D} = \sqrt{(X_2 - X_1)^2 + (Y_2 - Y_1)^2 + (Z_2 - Z_1)^2}$$

The results are saved as merged_data.csv, which can be further processed and summarized with a pivot table using the template XY Repositioning Accuracy_Template_All-Files.xlsx.
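For readers who want to check these calculations outside R, here is a minimal plain Python 3 sketch of the same two steps for a single track. It assumes positions are in µm (as the ×1000 conversion to nm in the R script implies), sorted by frame starting at frame 0, with one spot per frame; the function names are hypothetical and not part of TrackMate.

```python
import math

# Assumption: a track is a list of (x, y, z) positions in µm, sorted by
# FRAME (frame 0 first), with exactly one spot per frame.


def relative_positions_nm(positions_um):
    """Position relative to the first frame, converted from µm to nm."""
    x0, y0, z0 = positions_um[0]
    return [((x - x0) * 1000, (y - y0) * 1000, (z - z0) * 1000)
            for x, y, z in positions_um]


def step_displacements_nm(relative_nm):
    """Frame-to-frame 2D and 3D displacements (nm); frame 0 is set to 0."""
    d2d, d3d = [0.0], [0.0]
    for (x1, y1, z1), (x2, y2, z2) in zip(relative_nm, relative_nm[1:]):
        d2d.append(math.hypot(x2 - x1, y2 - y1))
        d3d.append(math.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2 + (z2 - z1) ** 2))
    return d2d, d3d


# Hypothetical track: a 3-4-5 nm step in XY, then a 12 nm step in Z
track_um = [(10.0, 20.0, 5.0), (10.003, 20.004, 5.0), (10.003, 20.004, 5.012)]
rel = relative_positions_nm(track_um)
d2d, d3d = step_displacements_nm(rel)
print([round(d, 3) for d in d3d])  # [0.0, 5.0, 12.0]
```

Like the R script, this measures the step between consecutive frames rather than the distance from the initial position; the relative positions themselves hold the latter.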

Repositioning accuracy vs Traveled Distance

Plot the 3D displacement for each condition as a function of the acquisition frame.
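The pivot-table step behind this plot (mean, SD and count of the 3D displacement per condition and frame) can be sketched in plain Python 3 as follows. The row keys "condition", "frame" and "disp3d_nm" are hypothetical stand-ins for the merged_data.csv columns (the Variable-00x column that encodes the condition, FRAME, and Displacement 3D (nm)).

```python
from collections import defaultdict
from statistics import mean, stdev

# Assumption: each row is a dict with hypothetical keys "condition",
# "frame" and "disp3d_nm" taken from merged_data.csv.


def summarize_by_condition_and_frame(rows):
    """Return {(condition, frame): (mean, sd, n)} of the 3D displacement."""
    groups = defaultdict(list)
    for row in rows:
        groups[(row["condition"], row["frame"])].append(row["disp3d_nm"])
    summary = {}
    for key, values in groups.items():
        # The sample SD is undefined for a single value, so report None.
        sd = stdev(values) if len(values) > 1 else None
        summary[key] = (mean(values), sd, len(values))
    return summary


rows = [
    {"condition": "1mm", "frame": 1, "disp3d_nm": 100.0},
    {"condition": "1mm", "frame": 1, "disp3d_nm": 200.0},
    {"condition": "10mm", "frame": 1, "disp3d_nm": 50.0},
]
for (condition, frame), (m, sd, n) in summarize_by_condition_and_frame(rows).items():
    print(condition, frame, m, sd, n)
```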

Here we observe high variability at Frame 1 (the 2nd image). This variability can come from the X, Y or Z axis, or from a combination of the three. We now plot the displacement along each axis as a function of the frame.
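Because the squared 3D displacement is the sum of the squared X, Y and Z steps, each axis's share of a given step can be computed directly. A minimal plain Python 3 sketch, assuming a list of positions in nm relative to frame 0 and indexed by frame number (frames starting at 0 with no gaps); `axis_contributions` is a hypothetical helper.

```python
# Assumption: `relative_nm` is a list of (x, y, z) positions in nm relative
# to frame 0, indexed by frame number (frames start at 0 with no gaps).


def axis_contributions(relative_nm, frame):
    """Fraction of the squared 3D step at `frame` contributed by each axis."""
    (x1, y1, z1), (x2, y2, z2) = relative_nm[frame - 1], relative_nm[frame]
    squared = {"X": (x2 - x1) ** 2, "Y": (y2 - y1) ** 2, "Z": (z2 - z1) ** 2}
    total = sum(squared.values())
    if total == 0:
        return {axis: 0.0 for axis in squared}
    return {axis: value / total for axis, value in squared.items()}


# Hypothetical step at Frame 1 dominated by X
shares = axis_contributions([(0.0, 0.0, 0.0), (300.0, 40.0, 90.0)], frame=1)
print(max(shares, key=shares.get))  # X
```

The shares sum to 1 for any non-zero step, which makes them comparable across conditions with different displacement magnitudes.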

We observe that the X axis contributes to the high variability at Frame 1. Next, plot the 3D displacement as a scatter dot plot for each condition and repeat.

We observe that the recorded data are consistent, with the exception of a single value per condition.
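The page does not say how such isolated values are identified. One common option is Tukey's 1.5 × IQR rule, sketched here in plain Python 3 (3.8+ for `statistics.quantiles`) as an assumption rather than the method used above; apply it separately to the values of each condition.

```python
from statistics import quantiles

# Assumption: Tukey's 1.5 x IQR rule is used here as one common way to flag
# outliers; it is not necessarily the criterion applied on this page.


def tukey_outliers(values, k=1.5):
    """Return the values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)  # default "exclusive" method, >= 2 values
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical 3D displacements (nm) for one condition
print(tukey_outliers([10, 12, 11, 13, 12, 11, 250]))  # [250]
```

With only a few repeats per condition, the quartile estimates (and therefore the fences) depend noticeably on the quantile method, so flagged values are best inspected rather than removed automatically.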

These graphs show the variability per experiment. Most experiments show a shift in X, and some show a shift in Y. After this shift, the data are more consistent.

The traveled distance significantly affects the repositioning accuracy at 1 mm, 10 mm and 30 mm.
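The page does not state which statistical test supports this comparison. As an illustration only, a two-sided permutation test on the difference in mean 3D displacement between two traveled distances can be sketched in plain Python 3; `permutation_test` and the example values are hypothetical.

```python
import random
from statistics import mean

# Assumption: a two-sided permutation test on the difference in means is
# used for illustration; it is not necessarily the test used on this page.


def permutation_test(a, b, n_permutations=10000, seed=0):
    """Monte Carlo p-value for |mean(a) - mean(b)| under random relabelling."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            extreme += 1
    # Adding 1 to both counts includes the observed labelling, so p > 0.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical 3D displacements (nm) for two traveled distances
p = permutation_test([21.0, 25.0, 19.0, 23.0, 22.0], [41.0, 38.0, 45.0, 40.0, 43.0])
print(f"p = {p:.4f}")
```

The test assumes the values are exchangeable between groups; frames from the same repeat are not independent, so testing one summary value per repeat is safer. Comparing several distances pairwise also calls for a multiple-comparison correction (e.g. Bonferroni).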

Repositioning accuracy vs Speed and Acceleration

Conclusion

Acquisition protocol

Results

Conclusion

Field Illumination Uniformity

Acquisition protocol

Results

Conclusion

Template

Acquisition protocol

Results

Conclusion

Template

Acquisition protocol

Results

Conclusion

Template

Acquisition protocol

Results

Conclusion

References

The information provided here is inspired by the following references:

https://doi.org/10.17504/protocols.io.5jyl853ndl2w/v2

https://doi.org/10.1083/jcb.202107093
