Created by Nicolas Stifani, last updated on Dec 25, 2024

This page provides a practical guide for microscope quality control. By following the outlined steps, using the provided template files, and running the included scripts, you will have everything needed to easily generate a comprehensive report on your microscope's performance.

Equipment used

- Thorlabs Power Meter (PM400) and sensor (S170C)

- Thorlabs Fluorescent Slides (FSK5)

- TetraSpeck™ Fluorescent Microspheres Size Kit (mounted on slide) ThermoFisher (T14792)

Software used

- FIJI

- MetroloJ_QC plugin for FIJI

- iText plugin for FIJI

- R, from the CRAN R Project

- I typically use RStudio, an integrated development environment (IDE) for R

Please note that during quality control, you may, and likely will, encounter defects or unexpected behavior. This practical guide is not intended to assist with investigating or resolving these issues. With that said, we wish you the best of luck and are committed to providing support. Feel free to reach out to us at microscopie@cib.umontreal.ca

Illumination Warmup Kinetic

After being switched on, light sources require time to reach a stable steady state. This duration is referred to as the warm-up period. To ensure accurate performance, it is essential to record the warm-up kinetics at least once a year to precisely define this period. For a detailed exploration of illumination stability, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Acquisition protocol

- Place a power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Center the sensor with the objective to ensure proper alignment.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or compatible software, select the wavelength of the light source you want to monitor.

- Turn on the light source and immediately record the power output over time until it stabilizes.

I personally record every 10 seconds for up to 24 hours and stop once the power output has been stable for 1 h.

- Repeat steps 3 to 5 for each light source you want to monitor

Keep the light source turned on at all times. Depending on your hardware, the light source may remain continuously on or be automatically shut down by the software when not in use.

Results

Use the provided spreadsheet template, Illumination_Warmup Kinetic_Template.xlsx, and fill in the orange cells to visualize your results. For each light source, plot the measured power output (in mW) against time to analyze the data.

Calculate the relative power using the formula: Relative Power = (Power / Max Power). Then, plot the Relative Power (%) against time to visualize the data.

We observe some variability in the power output for the 385 nm light source.

To assess the stability:

- Define a Stability Duration Window: select a time period (e.g., 10 minutes) during which the power output should remain stable.

- Specify a Maximum Coefficient of Variation (CV) Threshold: determine an acceptable variability limit for the selected window (e.g., 0.01%).

- Calculate the Coefficient of Variation (CV): use the formula CV = (Standard Deviation / Mean) and compute the CV for the specified stability duration window.

- Visualize Stability: plot the calculated CV over time to analyze the stability of the power output.
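The stability assessment above can also be sketched in R. This is only a minimal illustration, not the method implemented in the spreadsheet template: the helper names `rolling_cv` and `stabilisation_time` are hypothetical, the power trace is synthetic, and I assume here that the stabilisation time is the start of the first window whose CV is at or below the threshold.

```r
# Hypothetical helper: CV (sd / mean) over a trailing window of window_n samples
rolling_cv <- function(power, window_n) {
  n <- length(power)
  cv <- rep(NA_real_, n)
  if (n < window_n) return(cv)
  for (i in window_n:n) {
    w <- power[(i - window_n + 1):i]
    cv[i] <- sd(w) / mean(w)
  }
  cv
}

# Hypothetical helper: start time (min) of the first window with CV <= max_cv
# (assumption: this is how the stabilisation time is defined)
stabilisation_time <- function(time_min, power, window_min = 10, max_cv = 1e-4) {
  window_n <- round(window_min / median(diff(time_min)))
  cv <- rolling_cv(power, window_n)
  first_ok <- which(cv <= max_cv)[1]
  if (is.na(first_ok)) return(NA_real_)
  time_min[first_ok - window_n + 1]
}

# Synthetic warm-up trace: one reading every 10 s for 2 h, converging to 100 mW
time_min <- seq(0, 120, by = 1 / 6)
power_mw <- 100 * (1 - 0.05 * exp(-time_min / 5))

# max_cv = 1e-4 corresponds to a CV threshold of 0.01%
t_stable <- stabilisation_time(time_min, power_mw, window_min = 10, max_cv = 1e-4)
cat("Stabilisation time (min):", round(t_stable, 1), "\n")
```

Plotting `rolling_cv(power_mw, 60)` against `time_min` gives the CV-over-time curve described in the last step.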

We observe that most light sources stabilize in less than 10 minutes, whereas the 385 nm light source takes approximately 41 minutes to reach stability.

Report the results in a table

| | 385nm | 475nm | 555nm | 630nm |
|---|---|---|---|---|
| Stabilisation time in min (Max CV 0.01% for 10 min) | 41 | 3 | 3 | 8 |
| Stability Factor (%) Before Warmup | 99.7% | 99.9% | 100.0% | 100.0% |
| Stability Factor (%) After Warmup | 100.0% | 100.0% | 100.0% | 99.9% |

Selected Stability Duration Window (min): 10 min and Maximum Coefficient of Variation: 0.01%

Conclusion

The illumination warm-up time for this instrument is approximately 40 minutes. This duration is essential for ensuring accurate quantitative measurements, as the Coefficient of Variation (CV) threshold is strict, with a maximum allowable variation of 0.01% within a 10-minute window.

Illumination Maximum Power Output

This measure assesses the maximum power output of each light source, considering both the quality of the light source and the components along the light path. Over time, we expect a gradual decrease in power output due to the aging of hardware, including the light source and other optical components. These measurements will also be used to track the performance of the light sources over their lifetime (see Long-Term Illumination Stability section). For a detailed exploration of illumination properties, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Acquisition protocol

- Warm up the light sources for the required duration (see previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Center the sensor with the objective to ensure proper alignment.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn the light source on to 100% power.

- Record the average power output over 10 seconds.

I personally re-use the data collected during the warm-up kinetic experiment (when the power is stable) for this purpose.

- Repeat steps 5 to 7 for each light source and wavelength you wish to monitor.

Results

Fill in the orange cells in the Illumination_Maximum Power Output_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured maximum power output (in mW).

Compare the measured maximum power output (in mW) to the manufacturer’s specifications. Calculate the Relative Power using the formula: Relative Power = (Measured Power / Manufacturer Specifications), and plot the relative power for each light source.

Report the results in a table

| Light source | Manufacturer Specifications (mW) | Measurements 2024-11-22 (mW) | Relative Power (%) |
|---|---|---|---|
| 385nm | 150.25 | 122.2 | 81% |
| 470nm | 110.4 | 95.9 | 87% |
| 555nm | 31.9 | 24 | 75% |
| 630nm | 52 | 39.26 | 76% |
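As a quick cross-check of the spreadsheet, the Relative Power can be recomputed in R. This is a minimal sketch using the values from the table above; the variable names are my own.

```r
# Manufacturer specifications and measured maximum power (mW), from the table above
spec_mw <- c(`385nm` = 150.25, `470nm` = 110.4, `555nm` = 31.9, `630nm` = 52)
measured_mw <- c(`385nm` = 122.2, `470nm` = 95.9, `555nm` = 24, `630nm` = 39.26)

# Relative Power = Measured Power / Manufacturer Specifications
relative_power <- measured_mw / spec_mw

# Print as percentages with one decimal, plus the average across light sources
print(round(100 * relative_power, 1))
cat("Average relative power (%):", round(100 * mean(relative_power), 1), "\n")
```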

Conclusion

On average, this instrument provides approximately 80% of the power specified by the manufacturer (75% to 87%, depending on the light source). This is in line with expectations, as the manufacturer’s specifications are based on a different objective, and likely different filters and mirrors, which can affect the measured power output.

Illumination Stability

The light sources used on a microscope should remain constant or at least stable over the time scale of an experiment. For this reason, illumination stability is recorded across four different time scales:

- Real-time Illumination Stability: Continuous recording for 1 minute. This represents the duration of a z-stack acquisition.

- Short-term Illumination Stability: Recording every 1-10 seconds for 5-15 minutes. This represents the duration needed to acquire several images.

- Mid-term Illumination Stability: Recording every 10-30 seconds for 1-2 hours. This represents the duration of a typical acquisition session or short time-lapse experiments. For longer time-lapse experiments, a longer duration may be used.

- Long-term Illumination Stability: Recording once a year or more over the lifetime of the instrument.

For a detailed exploration of illumination stability, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Real-time Illumination Stability

Acquisition protocol

- Warm up the light sources for the required duration (see previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Center the sensor with the objective to ensure proper alignment.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn the light source on to 100% power.

- Record the power output every 100 ms for 1 minute. For microscopes dedicated to fast imaging, it may be necessary to record stability at a faster rate. The Thorlabs S170C sensor can record at 1 kHz.

I personally acquire this data immediately after the warm-up kinetic experiment, without turning off the light source.

- Repeat steps 5 to 7 for each light source and wavelength you wish to monitor.

Results

Fill in the orange cells in the Illumination_Stability_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured power output (in mW) over time.

Calculate the relative power using the formula: Relative Power = (Power / Max Power). Then, plot the Relative Power (%) over time.

Calculate the Stability Factor (S) using the formula: S (%) = 100 × (1 - (Pmax - Pmin) / (Pmax + Pmin)). Also, calculate the Coefficient of Variation (CV) using the formula: CV = Standard Deviation / Mean. Report the results in a table.

| Light source | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 99.99% | 0.002% |
| 475nm | 99.99% | 0.002% |
| 555nm | 99.97% | 0.004% |
| 630nm | 99.99% | 0.002% |

From the Stability Factor results, we observe that the difference between the maximum and minimum power is at most 0.03% of their sum (roughly 0.06% of the mean power). Additionally, the Coefficient of Variation indicates that the standard deviation is at most 0.004% of the mean value, demonstrating excellent power stability.
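The two metrics used in the stability sections can be written as small R functions. This is a minimal sketch of the formulas given above; the function names and the example readings are hypothetical.

```r
# Stability Factor: S (%) = 100 * (1 - (Pmax - Pmin) / (Pmax + Pmin))
stability_factor <- function(power) {
  100 * (1 - (max(power) - min(power)) / (max(power) + min(power)))
}

# Coefficient of Variation: CV = Standard Deviation / Mean (as a fraction)
coefficient_of_variation <- function(power) {
  sd(power) / mean(power)
}

# Hypothetical power readings (mW), for illustration only
power_mw <- c(10.000, 10.001, 9.999, 10.002, 9.998)

s_pct <- stability_factor(power_mw)
cv <- coefficient_of_variation(power_mw)
cat("Stability Factor (%):", round(s_pct, 3), "\n")
cat("CV (%):", signif(100 * cv, 3), "\n")
```

The same two functions apply unchanged to the real-time, short-term, and mid-term recordings; only the sampling interval and duration differ.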

Conclusion

The light sources exhibit a very high stability, >99.9% during a 1-minute period.

Short-term Illumination Stability

Acquisition protocol

- Warm up the light sources for the required duration (see previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Ensure the sensor is properly centered with the objective for accurate measurements.

- Zero the sensor to ensure precise readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the desired wavelength of the light source.

- Turn on the light source to 100% intensity.

- Record the power output every 10 seconds for 15 minutes.

I personally re-use the data collected during the warm-up kinetic experiment (when the power is stable) for this purpose.

- Repeat steps 5 to 7 for each light source you wish to monitor.

Results

Fill in the orange cells in the Illumination_Stability_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured power output (in mW) over time.

Calculate the relative power using the formula: Relative Power = Power / Max Power. Then, plot the Relative Power (%) over time.

Calculate the Stability Factor (S) using the formula: S (%) = 100 × (1 - (Pmax - Pmin) / (Pmax + Pmin)). Also, calculate the Coefficient of Variation (CV) using the formula: CV = Standard Deviation / Mean. Report the results in a table.

| Light source | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 100.00% | 0.000% |
| 475nm | 100.00% | 0.002% |
| 555nm | 100.00% | 0.003% |
| 630nm | 99.99% | 0.004% |

From the Stability Factor results, we observe that the difference between the maximum and minimum power is at most 0.01% of their sum. Additionally, the Coefficient of Variation indicates that the standard deviation is at most 0.004% of the mean value, demonstrating excellent power stability.

Conclusion

The light sources exhibit high stability, maintaining >99.9% stability during a 15-minute period.

Mid-term Illumination Stability

Acquisition protocol

- Warm up the light sources for the required duration (see previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Ensure the sensor is properly centered with the objective for accurate measurements.

- Zero the sensor to ensure precise readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the desired wavelength of the light source.

- Turn on the light source to 100% intensity.

- Record the power output every 10 seconds for 1 hour.

I personally re-use the data collected during the warmup kinetic experiment.

- Repeat steps 5 to 7 for each light source you wish to monitor

Results

Fill in the orange cells in the Illumination_Stability_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured power output (in mW) over time to assess stability.

Calculate the relative power using the formula: Relative Power = Power / Max Power. Then, plot the Relative Power (%) over time.

Calculate the Stability Factor (S) using the formula: S (%) = 100 × (1 - (Pmax - Pmin) / (Pmax + Pmin)). Also, calculate the Coefficient of Variation (CV) using the formula: CV = Standard Deviation / Mean. Report the results in a table.

| Light source | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 99.98% | 0.013% |
| 475nm | 99.98% | 0.011% |
| 555nm | 99.99% | 0.007% |
| 630nm | 99.97% | 0.020% |

From the Stability Factor results, we observe that the difference between the maximum and minimum power is at most 0.03% of their sum. Additionally, the Coefficient of Variation indicates that the standard deviation is at most 0.02% of the mean value, demonstrating excellent power stability.

Conclusion

The light sources exhibit exceptional stability, maintaining a performance of >99.9% during a 1-hour period.

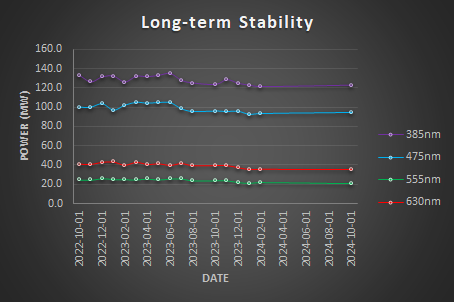

Long-term Illumination Stability

Long-term illumination stability measures the power output over the lifetime of the instrument. Over time, we expect a gradual decrease in power output due to the aging of hardware, including the light source and other optical components. This is not a separate experiment per se: it is the maximum power output measurement tracked over time.

Acquisition protocol

- Warm up the light sources for the required duration (see previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Center the sensor with the objective to ensure proper alignment.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn the light source on to 100% power.

- Record the average power output over 10 seconds.

I personally re-use the data collected for the maximal power output section and plot it over time.

- Repeat steps 5 to 7 for each light source and wavelength you wish to monitor.

Results

Fill in the orange cells in the Illumination_Stability_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured power output (in mW) over time to assess the stability of the illumination.

Calculate the relative power using the formula: Relative Power = Power / Max Power. Then, plot the Relative Power (%) over time.

Calculate the Relative PowerSpec by comparing the measured power to the manufacturer’s specifications using the following formula: Relative PowerSpec = Power / PowerSpec. Then, plot the Relative PowerSpec (% Spec) over time.

We expect a gradual decrease in power output over time due to the aging of hardware. Light sources should be replaced when the Relative PowerSpec falls below 50%.
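A minimal R sketch of this long-term tracking is given below, assuming one maximum power measurement per year. The 2022 and 2023 values are hypothetical and for illustration only; the specification and the 2024-11-22 measurement are the 385 nm values reported in this guide. The column names are my own.

```r
# Maximum power history for one light source (mW)
history <- data.frame(
  date = as.Date(c("2022-11-20", "2023-11-21", "2024-11-22")),
  power_mw = c(130.0, 126.0, 122.2)  # first two values are hypothetical
)
power_spec_mw <- 150.25  # manufacturer specification for 385 nm

# Relative Power = Power / Max Power
history$relative_power <- history$power_mw / max(history$power_mw)

# Relative PowerSpec = Power / PowerSpec
history$relative_power_spec <- history$power_mw / power_spec_mw

# Flag measurements below the 50% replacement threshold
history$needs_replacement <- history$relative_power_spec < 0.5

print(history)
```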

Report the results in a table.

| Light source | Stability Factor | Coefficient of Variation |
|---|---|---|
| 385nm | 94.51% | 3.49% |
| 475nm | 93.59% | 4.42% |
| 555nm | 88.96% | 6.86% |
| 630nm | 89.46% | 6.71% |

Conclusion

The light sources have been reasonably stable over the last 2 years, but a decrease in the maximum power output is visible.

Illumination Stability Conclusions

| Light source | Real-time 1 min | Short-term 15 min | Mid-term 1 h |
|---|---|---|---|
| 385nm | 99.99% | 100.00% | 99.98% |
| 475nm | 99.99% | 100.00% | 99.98% |
| 555nm | 99.97% | 100.00% | 99.99% |
| 630nm | 99.99% | 99.99% | 99.97% |

The light sources are highly stable (>99.9%).

Illumination Input-Output Linearity

This measure compares the power output as the input varies. A linear relationship is expected between the input and the power output. For a detailed exploration of illumination linearity, refer to the Illumination Power, Stability, and Linearity Protocol by the QuaRep Working Group 01.

Acquisition protocol

- Warm up the light sources for the required duration (refer to the previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Ensure the sensor is properly centered with the objective for accurate measurements.

- Zero the sensor to ensure precise readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn on the light source and adjust its intensity to 0%, then incrementally increase to 10%, 20%, 30%, and so on, up to 100%.

I typically collect this data immediately after the warm-up kinetic phase and once the real-time power stability data has been recorded.

- Record the power output corresponding to each input level.

- Repeat steps 5 to 7 for each light source you wish to monitor

Results

Fill in the orange cells in the Illumination_Linearity_Template.xlsx spreadsheet to visualize your results. For each light source, plot the measured power output (in mW) as a function of the input (%).

Calculate the Relative Power using the formula: Relative Power = Power / MaxPower. Then, plot the Relative Power (%) as a function of the input (%).

Determine the equation for each curve, which is typically a linear relationship of the form: Output = K × Input. Report the slope (K) and the coefficient of determination (R²) for each curve in a table.

Illumination Input-Output Linearity

| Light source | Slope | R² |
|---|---|---|
| 385nm | 0.9969 | 1 |
| 475nm | 0.9984 | 1 |
| 555nm | 1.0012 | 1 |
| 630nm | 1.0034 | 1 |

The slopes, all very close to 1, demonstrate a nearly perfect proportional relationship between the input and the measured output power. The coefficient of determination (R²), which rounds to 1 for every light source, indicates an essentially perfect linear fit.
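The slope (K) and R² can also be obtained in R with a linear fit forced through the origin, matching the form Output = K × Input. This is a minimal sketch with hypothetical readings; it is not the calculation performed by the spreadsheet template.

```r
# Input levels (%) and hypothetical relative power readings (%), for illustration only
input_pct <- seq(0, 100, by = 10)
relative_power_pct <- c(0, 10.1, 19.9, 30.2, 39.8, 50.1, 60.0, 69.9, 80.1, 90.0, 100.0)

# Fit Output = K * Input (the "0 +" term removes the intercept)
fit <- lm(relative_power_pct ~ 0 + input_pct)

slope <- unname(coef(fit)[1])
r_squared <- summary(fit)$r.squared

cat("Slope K:", round(slope, 4), "\n")
cat("R squared:", round(r_squared, 4), "\n")
```

Note that for a model without intercept, R computes R² relative to zero rather than to the mean of the data, so the value may differ slightly from the one reported by other tools.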

Conclusion

The light sources are highly linear.

Objectives and Cubes Transmittance

Since we are using a power meter, we can easily assess the transmittance of the objectives and filter cubes. This measurement compares the power output when different objectives and filter cubes are in the light path. It evaluates the transmittance of each objective and compares it with the manufacturer’s specifications. This method can help detect defects or dirt on the objectives. It can also verify the correct identification of the filters installed in the microscope.

Objectives Transmittance

Acquisition protocol

- Warm up the light sources for the required duration (refer to the previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Ensure the sensor is properly centered with the objective.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn on the light source to 100% intensity.

- Record the power output for each objective, as well as without the objective in place.

I typically collect this data after completing the warm-up kinetic phase, followed by the real-time power stability measurements, and immediately after recording the power output linearity.

- Repeat steps 5 to 7 for each light source and wavelength

Results

Fill in the orange cells in the Objective and cube transmittance_Template.xlsx spreadsheet to visualize your results. For each objective, plot the measured power output (in mW) as a function of the wavelength (in nm).

Calculate the Relative Transmittance using the formula: Relative Transmittance = Power / PowerNoObjective. Then, plot the Relative Transmittance (%) as a function of the wavelength (in nm).

Calculate the average transmittance for each objective and report the results in a table. Compare the average transmittance to the specifications provided by the manufacturer to assess performance.

| Objective | Average Transmittance | Specifications [470-630] | Average Transmittance |
|---|---|---|---|
| 2.5x-0.075 | 77% | >90% | 84% |
| 10x-0.25-Ph1 | 60% | >80% | 67% |
| 20x-0.5 Ph2 | 62% | >80% | 68% |
| 63x-1.4 | 29% | >80% | 35% |

The measurements are generally close to the specifications, with the exception of the 63x-1.4 objective. This deviation is expected, as the 63x objective has a smaller back aperture, which reduces the amount of light it can receive. Additionally, you can compare the shape of the transmittance curves to further assess performance.
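The Relative Transmittance and its average per objective can be sketched in R as follows. The readings are hypothetical and only illustrate the calculation; the objective and wavelength labels are placeholders.

```r
# Hypothetical power readings (mW) without any objective, one value per wavelength (nm)
power_no_objective <- c(`475` = 100, `555` = 30, `630` = 40)

# Hypothetical power readings (mW) with each objective; rows = objectives, columns = wavelengths
power <- rbind(
  `10x` = c(62, 18, 23),
  `63x` = c(30, 10, 13)
)
colnames(power) <- names(power_no_objective)

# Relative Transmittance = Power / PowerNoObjective, computed column by column
transmittance <- sweep(power, 2, power_no_objective, "/")

# Average transmittance per objective across wavelengths
average_transmittance <- rowMeans(transmittance)
print(round(100 * average_transmittance, 1))
```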

Conclusion

The objectives are transmitting light properly.

Cubes Transmittance

Acquisition protocol

- Warm up the light sources for the required duration (refer to the previous section).

- Place the power meter sensor (e.g., Thorlabs S170C) on the microscope stage.

- Ensure the sensor is properly centered with the objective.

- Zero the sensor to ensure accurate readings.

- Using your power meter controller (e.g., Thorlabs PM400) or software, select the wavelength of the light source you wish to monitor.

- Turn on the light source to 100% intensity.

- Record the power output for each filter cube.

I typically collect this data after completing the warm-up kinetic phase, followed by the real-time power stability measurements, and immediately after recording the power output linearity.

- Repeat steps 5 to 7 for each light source and wavelength

Results

Fill in the orange cells in the Objective and cube transmittance_Template.xlsx spreadsheet to visualize your results. For each filter cube, plot the measured power output (in mW) as a function of the wavelength (in nm).

Calculate the Relative Transmittance using the formula: Relative Transmittance = Power / PowerMaxFilter. Then, plot the Relative Transmittance (%) as a function of the wavelength (in nm).

Calculate the average transmittance for each filter at the appropriate wavelengths and report the results in a table.

| Filter cube | 385 nm | 475 nm | 555 nm | 590 nm | 630 nm |
|---|---|---|---|---|---|
| DAPI/GFP/Cy3/Cy5 | 100% | 100% | 100% | 100% | 100% |
| DAPI | 14% | 0% | 0% | 8% | 0% |
| GFP | 0% | 47% | 0% | 0% | 0% |
| DsRed | 0% | 0% | 47% | 0% | 0% |
| DHE | 0% | 0% | 0% | 0% | 0% |
| Cy5 | 0% | 0% | 0% | 0% | 84% |

The DAPI cube transmits only 14% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5. While it is still usable, it will provide a low signal. This is likely because the excitation filter within the cube does not match the light source properly. Since an excitation filter is already included in the light source, the filter in this cube could be removed.

The GFP and DsRed cubes transmit 47% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5, and they are functioning properly.

The DHE cube does not transmit any light from the Colibri. This cube may need to be removed and stored.

The Cy5 cube transmits 84% of the excitation light compared to the Quad Band Pass DAPI/GFP/Cy3/Cy5, and it is working properly.
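For the filter cubes, the normalization can be sketched in R as below. I assume here that PowerMaxFilter is the highest power measured among all cubes at a given wavelength, which is consistent with the quad-band cube reading 100% everywhere. The readings are hypothetical.

```r
# Hypothetical power readings (mW); rows = filter cubes, columns = wavelengths (nm)
power <- rbind(
  Quad = c(120, 95, 24),
  DAPI = c(17, 0, 0),
  GFP = c(0, 45, 0)
)
colnames(power) <- c("385", "475", "555")

# Assumption: PowerMaxFilter is the maximum across cubes at each wavelength
power_max_filter <- apply(power, 2, max)

# Relative Transmittance = Power / PowerMaxFilter
relative_transmittance <- sweep(power, 2, power_max_filter, "/")
print(round(100 * relative_transmittance))
```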

Conclusion

Actions to be Taken:

- Remove the excitation filter from the DAPI cube, as it does not properly match the light source and is redundant with the excitation filter already included in the light source.

- Remove and store the DHE cube, as it does not transmit any light from the Colibri and is no longer functional.

We are done with the power meter.

Field Illumination Uniformity

Having confirmed the stability of our light sources and verified that the optical components (objectives and filter cubes) are transmitting light effectively, we can now proceed to evaluate the uniformity of the illumination. This step assesses how evenly the illumination is distributed. For a comprehensive guide on illumination uniformity, refer to the Illumination Uniformity by the QuaRep Working Group 03.

Acquisition protocol

- Place a fluorescent plastic slide (Thorlabs FSK5) onto the stage.

- Center the slide and the objective.

- Adjust the focus to align with the surface of the slide.

I typically use a red fluorescent slide and focus on a scratch mark on its surface for alignment.

- Slightly adjust the focus deeper into the slide to minimize the visibility of dust, dirt, and scratches.

- Modify the acquisition parameters to ensure the image is properly exposed.

I typically use the auto-exposure feature, aiming for a targeted intensity of 30%.

- Capture a multi-channel image.

- Repeat steps 5 and 6 for each objective and filter combination.

Results

You should have acquired several multi-channel images that now need processing to yield meaningful results. To process them, use the Field Illumination analysis feature of the MetroloJ_QC plugin for FIJI. For more information about the MetroloJ_QC plugin, please refer to the manual available from MontpellierRessourcesImagerie on GitHub.

- Open FIJI.

- Load your image by dragging it into the FIJI bar.

- Launch the MetroloJ_QC plugin by navigating to Plugins > MetroloJ QC.

- Click on Field Illumination Report.

- Enter a title for your report.

- Type in your name.

- Click Filter Parameters and input the filter's names, excitation, and emission wavelengths.

- Check Remove Noise using Gaussian Blur.

- Enable Apply Tolerance to the Report and reject uniformity and accuracy values below 80%.

- Click File Save Options.

- Select Save Results as PDF Reports.

- Select Save Results as spreadsheets.

- Click OK.

- Repeat steps 4 through 13 for each image you have acquired.

This will generate detailed results stored in a folder named Processed. The Processed folder will be located in the same directory as the original images, with each report and result saved in its own sub-folder.

Use the following R script Process Field Uniformity Results.R to merge all _results.xls files into a single file.

This script will load all _results.xls files located in the "Input" folder on your desktop or prompt you to select an input folder.

It will compile and merge data generated by the MetroloJ_QC Field Illumination analysis, including metrics such as Uniformity and Centering Accuracy for each channel from every file.

Additionally, it will split the filenames using the _ character and create columns named Variable-001, Variable-002, etc. This will help in organizing the data if the filenames are formatted as Objective_VariableA_VariableB, for example.

The combined data will be saved as Field-Illumination_Combined-Data.csv in the Output folder on your desktop.

# Clear the workspace

rm(list = ls())

# Set default input and output directories

default_input_dir <- file.path(Sys.getenv("USERPROFILE"), "Desktop", "Input") # Default to "Input" on Desktop

InputFolder <- default_input_dir # Use default folder

# Prompt user to select a folder (choose.dir is Windows-only; comment out the next line to use the Input folder on your desktop directly)

InputFolder <- choose.dir(default = default_input_dir, caption = "Select an Input Folder")

# If no folder is selected, fall back to the default

if (is.na(InputFolder)) {

InputFolder <- default_input_dir

}

# Specify the Output folder (this is a fixed folder on the Desktop)

OutputFolder <- file.path(Sys.getenv("USERPROFILE"), "Desktop", "Output")

if (!dir.exists(OutputFolder)) dir.create(OutputFolder, recursive = TRUE)

# List all XLS files ending with "_results.xls" in the folder and its subfolders

xls_files <- list.files(path = InputFolder, pattern = "_results\\.xls$", full.names = TRUE, recursive = TRUE)

if (length(xls_files) == 0) stop("No _results.xls files found in the selected directory.")

# Define the header pattern to search for

header_pattern <- "Filters combination/set.*Uniformity.*Field Uniformity.*Centering Accuracy.*Image.*Coef. of Variation.*Mean c fit value"

# Initialize a list to store tables

all_tables <- list()

# Loop over all XLS files

for (file in xls_files) {

# Read the file as raw lines (since it's tab-separated)

lines <- readLines(file)

# Find the line that matches the header pattern

header_line_index <- grep(header_pattern, lines)

if (length(header_line_index) == 0) {

cat("No matching header found in file:", file, "\n")

next # Skip this file if the header isn't found

}

# Extract the table starting from the header row

start_row <- header_line_index[1]

# The table ends at the first empty line after the header; if there is none, use the last line

empty_after_header <- grep("^\\s*$", lines[start_row:length(lines)])

end_row <- if (length(empty_after_header) > 0) empty_after_header[1] + start_row - 1 else length(lines)

# Extract the table rows (lines between start_row and end_row)

table_lines <- lines[start_row:end_row]

# Convert the table lines into a data frame

table_data <- read.table(text = table_lines, sep = "\t", header = TRUE, stringsAsFactors = FALSE)

# Add the filename as the first column (without extension)

filename_no_extension <- tools::file_path_sans_ext(basename(file))

table_data$Filename <- filename_no_extension

# Split the filename by underscores and add as new columns

filename_parts <- unlist(strsplit(filename_no_extension, "_"))

for (i in seq_along(filename_parts)) {

table_data[[paste0("Variable-", sprintf("%03d", i))]] <- filename_parts[i]

}

# Rename columns based on the exact names found in the data

colnames(table_data) <- gsub("Filters.combination.set", "Channel", colnames(table_data))

colnames(table_data) <- gsub("Uniformity....", "Uniformity (%)", colnames(table_data))

colnames(table_data) <- gsub("Field.Uniformity....", "Field Uniformity (%)", colnames(table_data))

colnames(table_data) <- gsub("Centering.Accuracy....", "Centering Accuracy (%)", colnames(table_data))

colnames(table_data) <- gsub("Coef..of.Variation", "CV", colnames(table_data))

# Remove the "Mean.c.fit.value" column if present

colnames(table_data) <- gsub("Mean.c.fit.value", "", colnames(table_data))

# Drop any columns that were renamed to an empty string

table_data <- table_data[, !grepl("^$", colnames(table_data))]

# Divide the relevant columns by 100 to convert percentages into decimal values

table_data$`Uniformity (%)` <- table_data$`Uniformity (%)` / 100

table_data$`Field Uniformity (%)` <- table_data$`Field Uniformity (%)` / 100

table_data$`Centering Accuracy (%)` <- table_data$`Centering Accuracy (%)` / 100

# Store the table in the list with the file name as the name

all_tables[[basename(file)]] <- table_data

}

# Check if any tables were extracted

if (length(all_tables) == 0) {

stop("No tables were extracted from the files.")

}

# Optionally, combine all tables into a single data frame (if the structures are consistent)

combined_data <- do.call(rbind, all_tables)

# View the combined data

#head(combined_data)

# Optionally, save the combined data to a CSV file

output_file <- file.path(OutputFolder, "Field-Illumination_Combined-Data.csv")

write.csv(combined_data, output_file, row.names = FALSE)

cat("Combined data saved to:", output_file, "\n")

The following spreadsheet provides a dataset that can be manipulated with a pivot table to generate informative graphs and statistics Illumination_Uniformity_Template.xlsx. Plot the uniformity and centering accuracy for each objective.

| Objective | Uniformity | Centering Accuracy |
|---|---|---|
| 2x | 97.5% | 92.7% |
| 10x | 97.0% | 94.5% |
| 20x | 97.3% | 97.1% |
| 63x | 96.6% | 96.7% |

Plot the uniformity and centering accuracy for each filter set.

| Filter | Uniformity | Centering Accuracy |
|---|---|---|
| DAPI | 98.3% | 99.4% |
| DAPIc | 95.8% | 84.9% |
| GFP | 98.1% | 99.1% |
| GFPc | 96.5% | 93.3% |
| Cy3 | 97.6% | 96.5% |
| Cy3c | 96.8% | 97.9% |
| Cy5 | 97.0% | 99.6% |
| Cy5c | 96.7% | 91.3% |

This specific instrument has a quad-band filter as well as individual filter cubes. We can plot the uniformity and centering accuracy per filter types.

| Filter Type | Uniformity | Centering Accuracy |

| Quad band | 97.7% | 98.7% |

| Single band | 96.5% | 91.8% |
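The per-filter-type values can be approximated directly from the per-filter table. The sketch below is a minimal illustration and not the spreadsheet's pivot table; it assumes that filter names ending in "c" are the individual (single-band) cubes, which is inferred from the numbers rather than stated on this page. The helper names are hypothetical.

```python
# Values copied from the per-filter table (uniformity %, centering accuracy %).
per_filter = {
    "DAPI": (98.3, 99.4), "DAPIc": (95.8, 84.9),
    "GFP": (98.1, 99.1), "GFPc": (96.5, 93.3),
    "Cy3": (97.6, 96.5), "Cy3c": (96.8, 97.9),
    "Cy5": (97.0, 99.6), "Cy5c": (96.7, 91.3),
}


def filter_type(name):
    # Assumption: a trailing "c" marks an individual filter cube.
    return "Single band" if name.endswith("c") else "Quad band"


def mean_by_filter_type(table):
    """Average uniformity and centering accuracy for each filter type."""
    groups = {}
    for name, values in table.items():
        groups.setdefault(filter_type(name), []).append(values)
    summary = {}
    for ftype, rows in groups.items():
        n = len(rows)
        summary[ftype] = (sum(r[0] for r in rows) / n,
                          sum(r[1] for r in rows) / n)
    return summary


summary = mean_by_filter_type(per_filter)
for ftype in sorted(summary):
    uniformity, centering = summary[ftype]
    print("{}: uniformity {:.2f}%, centering accuracy {:.2f}%".format(
        ftype, uniformity, centering))
```

Small differences from the table are expected because the table was presumably computed from unrounded measurements.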

Conclusion

The uniformity and centering accuracy are excellent across all objectives and filters, exceeding 90% in every case except the centering accuracy of the DAPIc filter (84.9%). The single-band filter cubes exhibit slightly lower uniformity and centering accuracy than the quad-band filter cube.

XYZ Drift

This experiment evaluates the stability of the system in the XY and Z directions. As noted earlier, when an instrument is started, it requires a warmup period to reach a stable steady state. To determine the duration of this phase accurately, it is recommended to record a warmup kinetic at least once per year.

Acquisition protocol

Place 4 µm diameter fluorescent beads (TetraSpeck Fluorescent Microspheres Size Kit, mounted on a slide) on the microscope stage.

Center an isolated bead under a high-NA dry objective.

- Crop the acquisition area to only visualize one bead but keep it large enough to anticipate a potential drift along the X and Y axes (a 100 µm FOV should be enough)

Select an imaging channel appropriate for the fluorescent beads

I typically use the Cy5 channel, which is very bright and resistant to bleaching. Its longer wavelength gives a lower resolution, but this does not really matter here.

Acquire a large Z-stack at 1-minute intervals for a duration of 24 hours.

To ensure accurate measurements, it is essential to account for potential drift along the Z-axis by acquiring a Z-stack that is substantially larger than the visible bead size. I typically acquire a 40 µm Z-stack.

Results

- Open your image in FIJI

- If necessary, crop the image to focus on a single bead for better visualization.

- Use the TrackMate plugin included in FIJI to detect and track spots over time: Plugins > Tracking > TrackMate.

- Apply Difference of Gaussians (DoG) spot detection with a detection size of 4 µm

- Enable sub-pixel localization for increased accuracy

- Click Preview to visualize the spot detection

- Set a quality threshold (click and slide) high enough to detect a single spot per frame

- Click Next and follow the detection and tracking process

- Save the detected Spots coordinates as a CSV file for further analysis

Open the spreadsheet template XYZ Drift Kinetic_Template.xlsx and fill in the orange cells. Copy and paste the XYZT and Frame columns from the TrackMate spots CSV file into the corresponding orange columns in the spreadsheet. Enter the numerical aperture (NA) and emission wavelength used during the experiment. Calculate the relative displacement in X, Y, and Z using the formula: Relative Displacement = Position - PositionInitial. Finally, plot the relative displacement over time to visualize the system's drift.

Visually identify the time at which the displacement becomes lower than the resolution of the system. On this instrument, it takes about 120 min to reach stability. Calculate the velocity, Velocity = (Displacement2 - Displacement1) / (T2 - T1), and plot the velocity over time.

Calculate the average velocity before and after stabilisation and report the results in a table.

| Objective NA | 0.5 |

| Wavelength (nm) | 705 |

| Resolution (nm) | 705 |

| Stabilisation time (min) | 122 |

| Average velocity Warmup (nm/min) | 113 |

| Average velocity System Ready (nm/min) | 14 |
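The spreadsheet calculations described above can be sketched in a few lines. This is a minimal illustration, not the template's actual formulas: the function names and the sample Z positions are made up, and the average is taken over absolute velocities, which is an assumption. The resolution uses Lateral Resolution = LambdaEmission / (2 × NA), as in the repositioning section of this page.

```python
def lateral_resolution(wavelength_nm, numerical_aperture):
    """Resolution estimate in nm: LambdaEmission / (2 x NA)."""
    return wavelength_nm / (2.0 * numerical_aperture)


def relative_displacement(positions):
    """Relative Displacement = Position - PositionInitial."""
    first = positions[0]
    return [p - first for p in positions]


def velocities(displacements, times):
    """Velocity = (Displacement2 - Displacement1) / (T2 - T1)."""
    return [
        (d2 - d1) / (t2 - t1)
        for d1, d2, t1, t2 in zip(displacements, displacements[1:],
                                  times, times[1:])
    ]


def mean_speed(values):
    # Assumption: average the magnitude so drifts in opposite
    # directions do not cancel out.
    return sum(abs(v) for v in values) / len(values)


# Made-up Z positions (nm) at 1-minute intervals, for illustration only.
times_min = [0, 1, 2, 3, 4]
z_nm = [5000.0, 5120.0, 5230.0, 5240.0, 5250.0]

z_rel = relative_displacement(z_nm)
z_vel = velocities(z_rel, times_min)

print("Resolution (nm):", lateral_resolution(705, 0.5))
print("Mean speed, first two intervals (nm/min):", mean_speed(z_vel[:2]))
print("Mean speed, last two intervals (nm/min):", mean_speed(z_vel[2:]))
```

With NA 0.5 and 705 nm emission, the resolution comes out at 705 nm, matching the table.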

Conclusion

The warmup time for this instrument is approximately 2 hours. After the warmup period, the average displacement velocity is 14 nm/min, which falls within an acceptable range.

XYZ Repositioning Dispersion

This experiment evaluates how accurately the system repositions in XY by measuring the dispersion of repositioning. Several variables can affect repositioning: i) Time, ii) Traveled distance, iii) Speed and iv) Acceleration. For a detailed exploration of stage repositioning, refer to the XY Repositioning Protocol by the QuaRep Working Group 06.

Acquisition protocol

Place 4 µm diameter fluorescent beads (TetraSpeck Fluorescent Microspheres Size Kit, mounted on a slide) on the microscope stage.

Center an isolated bead under a high-NA dry objective.

- Crop the acquisition area to only visualize one bead but keep it large enough to anticipate a potential drift along the X and Y axes (a 100 µm FOV should be enough)

Select an imaging channel appropriate for the fluorescent beads

I typically use the Cy5 channel which is very bright and resistant to bleaching, yet this channel has a lower resolution.

Acquire a Z-stack at positions separated by 0 µm (Drift), 1000 µm and 10 000 µm in both the X and Y directions.

Repeat the acquisition 20 times

Acquire 3 datasets for each condition

- Your stage might have a smaller range!

- Lower the objectives during movement to avoid damage

Results

- Open your image in FIJI

- If necessary, crop the image to focus on a single bead for better visualization.

- Use the TrackMate plugin included in FIJI to detect and track spots over time: Plugins > Tracking > TrackMate.

- Apply Difference of Gaussians (DoG) spot detection with a detection size of 4 µm

- Enable sub-pixel localization for increased accuracy

- Click Preview to visualize the spot detection

- Set a quality threshold (click and slide) high enough to detect a single spot per frame

- Click Next and follow the detection and tracking process

- Save the detected Spots coordinates as a CSV file for further analysis

Open the spreadsheet template XYZ_Repositioning Dispersion_Template.xlsx and fill in the orange cells. Copy and paste the XYZT and Frame columns from the TrackMate spots CSV file into the corresponding orange columns in the spreadsheet. Enter the numerical aperture (NA) and emission wavelength used during the experiment. Calculate the relative position in X, Y, and Z using the formula: Relative Position = Position - PositionInitial. Finally, plot the relative position over time to visualize the system's stage repositioning dispersion.

We observe an initial movement in X and Y that stabilises. Calculate the 2D displacement, 2D Displacement = Sqrt((X2 - X1)^2 + (Y2 - Y1)^2), and plot it over time. Calculate the resolution of your imaging configuration, Lateral Resolution = LambdaEmission / (2 × NA), and plot the resolution over time (constant).

This experiment shows a significant initial displacement between Frame 0 and Frame 1, ranging from 400 nm to 1000 nm, which decreases to 70 nm by Frame 2. To quantify this variation, calculate the dispersion for each parameter using the formula: Dispersion = Standard Deviation (Value). Report the results in a table.

| Traveled Distance (mm) | 0 mm | 1 mm | 10 mm |

| X Dispersion (nm) | 4 | 188 | 121 |

| Y Dispersion (nm) | 4 | 141 | 48 |

| Z Dispersion (nm) | 10 | 34 | 53 |

| Repositioning Dispersion 3D (nm) | 6 | 227 | 91 |

| Repositioning Dispersion 2D (nm) | 2 | 226 | 90 |

Conclusion

The system has a stage dispersion of 230 nm. The results are higher than expected because of the initial shift in the first frame that eventually stabilizes. Excluding the first frame significantly improves the measurements, reducing the repositioning dispersion to 40 nm. Further investigation is required to understand the underlying cause.

| Traveled Distance (mm) | 0 mm | 1 mm | 10 mm |

| X Dispersion (nm) | 3 | 28 | 52 |

| Y Dispersion (nm) | 3 | 68 | 35 |

| Z Dispersion (nm) | 10 | 26 | 40 |

| Repositioning Dispersion 3D (nm) | 6 | 43 | 36 |

| Repositioning Dispersion 2D (nm) | 2 | 40 | 36 |
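The dispersion values in the two tables above follow from the formulas given earlier: the 2D displacement between consecutive frames, and Dispersion = Standard Deviation (Value). The sketch below is an illustration only. It assumes a sample standard deviation, uses made-up positions, and introduces a hypothetical `skip_frames` parameter to show the effect of excluding the first frame.

```python
import math
import statistics


def displacement_2d(xs, ys):
    """Frame-to-frame 2D displacement: Sqrt((X2-X1)^2 + (Y2-Y1)^2)."""
    return [
        math.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2)
        for x1, x2, y1, y2 in zip(xs, xs[1:], ys, ys[1:])
    ]


def dispersion(values, skip_frames=0):
    """Standard deviation of the values, optionally ignoring early frames.

    Assumption: sample standard deviation (n - 1 denominator).
    """
    return statistics.stdev(values[skip_frames:])


# Made-up relative X positions (nm): a jump after Frame 0, then stable.
x_nm = [0.0, 400.0, 402.0, 398.0, 400.0]

print("All frames (nm):", round(dispersion(x_nm), 1))
print("Frame 0 excluded (nm):", round(dispersion(x_nm, skip_frames=1), 1))
```

A single outlying first frame dominates the standard deviation, which is why excluding it changes the reported dispersion so much.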

Further Investigation

We observed a significant shift in the first frame, which was unexpected and invites further investigation. These variables can affect repositioning dispersion: i) Traveled distance, ii) Speed, iii) Acceleration, iv) Time, and v) Environment. We decided to test the first three.

Methodology

To test if these variables have a significant impact on the repositioning, we followed the XYZ repositioning dispersion protocol with the following parameters:

- Distances: 0 µm, 1 µm, 10 µm, 1000 µm, 10 000 µm, 30 000 µm

- Speed: 10%, 100%

- Acceleration: 10%, 100%

- For each condition, 3 datasets were acquired
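To get a sense of how many datasets such a design produces, the parameters above can be enumerated programmatically. This sketch assumes a full factorial design (every distance combined with every speed and acceleration), which this page does not state explicitly. The underscore-separated file naming scheme is hypothetical; it is chosen only because the R script further down splits filenames on underscores to recover variables.

```python
from itertools import product

# Parameters listed above
distances_um = [0, 1, 10, 1000, 10000, 30000]
speeds_pct = [10, 100]
accelerations_pct = [10, 100]
replicates = 3

# Assumption: every combination is acquired (full factorial design).
conditions = list(product(distances_um, speeds_pct, accelerations_pct))

# Hypothetical naming scheme: one underscore-separated field per variable.
names = [
    "Dist-{:05d}_Speed-{:03d}_Acc-{:03d}_Rep-{}".format(d, s, a, r)
    for d, s, a in conditions
    for r in range(1, replicates + 1)
]

print("Conditions:", len(conditions))
print("Datasets:", len(names))
print("Example name:", names[0])
```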

This experimental protocol generated a substantial number of images. To process them automatically in ImageJ/FIJI using the TrackMate plugin, we use the following script Stage-Repositioning_Batch-TrackMate.py

import os
import sys
import csv
from ij import IJ, WindowManager, Prefs
from ij.gui import GenericDialog
from java.io import File
from javax.swing import JOptionPane, JFileChooser  # Dialogs for messages and folder selection
from fiji.plugin.trackmate import Model, Settings, TrackMate, SelectionModel, Logger
from fiji.plugin.trackmate.detection import DogDetectorFactory
from fiji.plugin.trackmate.tracking.jaqaman import SparseLAPTrackerFactory
from fiji.plugin.trackmate.features import FeatureFilter
from fiji.plugin.trackmate.features.track import TrackIndexAnalyzer
from fiji.plugin.trackmate.gui.displaysettings import DisplaySettingsIO
from fiji.plugin.trackmate.gui.displaysettings.DisplaySettings import TrackMateObject
from fiji.plugin.trackmate.visualization.table import TrackTableView
from fiji.plugin.trackmate.visualization.hyperstack import HyperStackDisplayer
from loci.plugins import BF
from loci.plugins.in import ImporterOptions

# Ensure UTF-8 encoding (Jython 2.x)
reload(sys)
sys.setdefaultencoding('utf-8')

# Default settings in case there's no previous config file
TrackMateSettingsDefault = {
    'subpixel_localization': True,
    'spot_diameter': 4.0,  # Default spot diameter in microns
    'threshold_value': 20.904,  # Default threshold value
    'apply_median_filtering': False
}

# Define keys for preferences
PREFS_KEY_SUBPIXEL = "BatchTrackmate.subpixel_localization"
PREFS_KEY_DIAMETER = "BatchTrackmate.spot_diameter"
PREFS_KEY_THRESHOLD = "BatchTrackmate.threshold_value"
PREFS_KEY_MEDIAN = "BatchTrackmate.apply_median_filtering"
PREFS_KEY_PROCESS_ALL_IMAGES = "BatchTrackmate.process_all_images"


def read_settings_from_prefs():
    # Preferences are stored as strings; convert them back to their types
    subpixel_localization_str = Prefs.get(PREFS_KEY_SUBPIXEL, "True")
    spot_diameter_str = Prefs.get(PREFS_KEY_DIAMETER, "4.0")
    threshold_value_str = Prefs.get(PREFS_KEY_THRESHOLD, "20.904")
    apply_median_filtering_str = Prefs.get(PREFS_KEY_MEDIAN, "False")
    process_all_images_str = Prefs.get(PREFS_KEY_PROCESS_ALL_IMAGES, "False")
    TrackMateSettingsStored = {
        'subpixel_localization': subpixel_localization_str.lower() == "true",
        'spot_diameter': float(spot_diameter_str),
        'threshold_value': float(threshold_value_str),
        'apply_median_filtering': apply_median_filtering_str.lower() == "true",
        'process_all_images': process_all_images_str.lower() == "true"
    }
    return TrackMateSettingsStored


def save_settings_to_prefs(TrackMateSettings):
    Prefs.set(PREFS_KEY_SUBPIXEL, str(TrackMateSettings['subpixel_localization']))
    Prefs.set(PREFS_KEY_DIAMETER, str(TrackMateSettings['spot_diameter']))
    Prefs.set(PREFS_KEY_THRESHOLD, str(TrackMateSettings['threshold_value']))
    Prefs.set(PREFS_KEY_MEDIAN, str(TrackMateSettings['apply_median_filtering']))
    Prefs.set(PREFS_KEY_PROCESS_ALL_IMAGES, str(TrackMateSettings['process_all_images']))
    Prefs.savePreferences()


def display_dialog(TrackMateSettings, imp, NbDetectedSpots):
    NbTimepoints = imp.getNFrames()
    Max_Intensity = imp.getProcessor().getMax()
    gd = GenericDialog("Batch TrackMate Parameters")
    # Add fields to the dialog, prefill with last saved values
    gd.addMessage("=== Detection Settings ===")
    gd.addCheckbox("Enable Subpixel Localization", TrackMateSettings['subpixel_localization'])
    gd.addNumericField("Spot Diameter (microns):", TrackMateSettings['spot_diameter'], 2, 15, "")
    gd.addSlider("Threshold Value:", 0, Max_Intensity, TrackMateSettings['threshold_value'])
    gd.addCheckbox("Apply Median Filtering", TrackMateSettings['apply_median_filtering'])
    gd.addCheckbox("Process All Images", TrackMateSettings['process_all_images'])
    # A failed detection returns False; treat it as zero detected spots
    if NbDetectedSpots == False:
        NbDetectedSpots = 0
    if NbDetectedSpots > NbTimepoints:
        gd.addMessage("Nb of detected spots too high. Increase the threshold.")
    elif NbDetectedSpots < NbTimepoints:
        gd.addMessage("Nb of detected spots too low. Decrease the threshold.")
    elif NbDetectedSpots == NbTimepoints:
        gd.addMessage("The nb of detected spots matches the nb of timepoints.")
    gd.addMessage("Nb of detected spots: " + str(NbDetectedSpots) + ". Nb of Timepoints: " + str(NbTimepoints) + ".")
    gd.addButton("Test Detection", lambda event: Test_Detection(event, gd))
    # Show the dialog
    gd.showDialog()
    User_Click = None  # Initialize User_Click within the function
    if gd.wasOKed():
        User_Click = "OK"
    elif gd.wasCanceled():
        User_Click = "Cancel"
    # Get user inputs from the dialog (same order as the fields were added)
    TrackMateSettingsUser = {}
    TrackMateSettingsUser['subpixel_localization'] = gd.getNextBoolean()
    TrackMateSettingsUser['spot_diameter'] = gd.getNextNumber()
    TrackMateSettingsUser['threshold_value'] = gd.getNextNumber()
    TrackMateSettingsUser['apply_median_filtering'] = gd.getNextBoolean()
    TrackMateSettingsUser['process_all_images'] = gd.getNextBoolean()
    return TrackMateSettingsUser, User_Click, NbDetectedSpots, NbTimepoints


def run_trackmate_detection(imp, TrackMateSettings, SaveCSV):
    model = Model()
    model.setLogger(Logger.IJ_LOGGER)  # Send messages to the ImageJ log window
    # Configure TrackMate for this image
    trackmate_settings = Settings(imp)
    trackmate_settings.detectorFactory = DogDetectorFactory()
    trackmate_settings.detectorSettings = {
        'DO_SUBPIXEL_LOCALIZATION': TrackMateSettings['subpixel_localization'],
        'RADIUS': TrackMateSettings['spot_diameter'] / 2,
        'TARGET_CHANNEL': 1,
        'THRESHOLD': TrackMateSettings['threshold_value'],
        'DO_MEDIAN_FILTERING': TrackMateSettings['apply_median_filtering'],
    }
    # Configure tracker
    trackmate_settings.trackerFactory = SparseLAPTrackerFactory()
    trackmate_settings.trackerSettings = trackmate_settings.trackerFactory.getDefaultSettings()
    trackmate_settings.trackerSettings['ALLOW_TRACK_SPLITTING'] = True
    trackmate_settings.trackerSettings['ALLOW_TRACK_MERGING'] = True
    # Add all known feature analyzers to compute track statistics
    trackmate_settings.addAllAnalyzers()
    # Configure track filters
    track_filter = FeatureFilter('TRACK_DISPLACEMENT', 10, False)
    trackmate_settings.addTrackFilter(track_filter)
    # Instantiate plugin
    trackmate = TrackMate(model, trackmate_settings)
    # Process
    if not trackmate.checkInput():
        IJ.log("Error checking input: " + trackmate.getErrorMessage())
        return False
    if not trackmate.process():
        IJ.log("Error during processing: " + trackmate.getErrorMessage())
        return False
    # Display results
    selection_model = SelectionModel(model)
    ds = DisplaySettingsIO.readUserDefault()
    ds.setTrackColorBy(TrackMateObject.TRACKS, TrackIndexAnalyzer.TRACK_INDEX)
    ds.setSpotColorBy(TrackMateObject.TRACKS, TrackIndexAnalyzer.TRACK_INDEX)
    displayer = HyperStackDisplayer(model, selection_model, imp, ds)
    displayer.render()
    displayer.refresh()
    # Count the detected spots (compared with the number of timepoints by the caller)
    NbDetectedSpots = model.getSpots().getNSpots(True)
    if SaveCSV:
        # Export spot table as CSV (output_dir is defined in the main section below)
        spot_table = TrackTableView.createSpotTable(model, ds)
        filename = imp.getTitle()
        output_csv_path = generate_unique_filepath(output_dir, filename, ".csv")
        spot_table.exportToCsv(File(output_csv_path))
    return NbDetectedSpots


def generate_unique_filepath(directory, base_name, extension):
    """
    Generates a unique filename by appending a numeric suffix.
    :param directory: The directory where the file will be saved.
    :param base_name: The base name of the file (without the suffix and extension).
    :param extension: The file extension (e.g., '.txt').
    :return: A unique filename with an incremented suffix.
    """
    suffix = 1
    while True:
        filename = "{}_Spots-{:03d}{}".format(base_name, suffix, extension)
        full_path = os.path.join(directory, filename)
        if not os.path.exists(full_path):
            return full_path
        suffix += 1


User_Click = None  # Initialize User_Click
processed_files = []  # List to keep track of processed files


def Test_Detection(event, gd):
    global User_Click
    User_Click = "Retry"
    gd.dispose()  # Close the dialog


def select_folder():
    """
    Prompts the user to select a folder using JFileChooser.
    :return: The selected folder path or None if no folder was selected.
    """
    desktop_dir = os.path.join(os.path.expanduser("~"), "Desktop")
    chooser = JFileChooser(desktop_dir)
    chooser.setFileSelectionMode(JFileChooser.DIRECTORIES_ONLY)
    chooser.setDialogTitle("Choose a directory containing the images to process")
    return_value = chooser.showOpenDialog(None)
    if return_value == JFileChooser.APPROVE_OPTION:
        return chooser.getSelectedFile().getAbsolutePath()
    return None


def open_image_with_bioformats(file_path):
    """
    Opens an image using Bio-Formats and returns the ImagePlus object.
    :param file_path: Path to the image file.
    :return: ImagePlus object or None if the file could not be opened.
    """
    options = ImporterOptions()
    options.setId(file_path)
    try:
        imps = BF.openImagePlus(options)
        if imps is not None and len(imps) > 0:
            return imps[0]
        else:
            IJ.log("No images found in the file: " + file_path)
            return None
    except Exception as e:
        IJ.log("Error opening file with Bio-Formats: " + str(e))
        return None


# Main processing loop: repeat detection until the settings are confirmed
folder_path = None
image_from_folder = False
while True:
    TrackMateSettingsStored = read_settings_from_prefs()
    imp = WindowManager.getCurrentImage()
    # If no image is open, prompt the user to select a folder
    if imp is None:
        folder_path = select_folder()
        if folder_path is not None:
            image_files = [f for f in os.listdir(folder_path) if f.lower().endswith(('.tif', '.tiff', '.jpg', '.jpeg', '.png', '.czi', '.nd2', '.lif', '.lsm', '.ome.tif', '.ome.tiff'))]
            if not image_files:
                JOptionPane.showMessageDialog(None, "No valid image files found in the selected folder.", "Error", JOptionPane.ERROR_MESSAGE)
                sys.exit(1)
            imp = open_image_with_bioformats(os.path.join(folder_path, image_files[0]))
            if imp is None:
                JOptionPane.showMessageDialog(None, "Failed to open the first image in the folder.", "Error", JOptionPane.ERROR_MESSAGE)
                sys.exit(1)
            image_from_folder = True
        else:
            JOptionPane.showMessageDialog(None, "No folder selected. Exiting.", "Error", JOptionPane.ERROR_MESSAGE)
            sys.exit(1)
    NbDetectedSpots = run_trackmate_detection(imp, TrackMateSettingsStored, SaveCSV=False)
    TrackMateSettingsUser, User_Click, NbDetectedSpots, NbTimepoints = display_dialog(TrackMateSettingsStored, imp, NbDetectedSpots)
    save_settings_to_prefs(TrackMateSettingsUser)
    # Leave the loop only when one spot per timepoint is detected with unchanged settings
    if User_Click == "OK" and NbDetectedSpots == NbTimepoints and TrackMateSettingsStored == TrackMateSettingsUser:
        break
    elif User_Click == "Cancel":
        IJ.log("User canceled the operation.")
        if image_from_folder:
            imp.close()
        sys.exit(1)

# Create output directory on the user's desktop
output_dir = os.path.join(os.path.expanduser("~"), "Desktop", "Output")
if not os.path.exists(output_dir):
    os.makedirs(output_dir)

# Initialize a list to store data for each processed file
processed_data = []
if TrackMateSettingsUser['process_all_images']:
    if folder_path is not None:
        # Process every image file found in the selected folder
        for image_file in image_files:
            # Close the previous image; it is None if the last file failed to open
            if imp is not None:
                imp.close()
            imp = open_image_with_bioformats(os.path.join(folder_path, image_file))
            if imp is not None:
                NbDetectedSpots = run_trackmate_detection(imp, TrackMateSettingsStored, SaveCSV=True)
                processed_files.append(image_file)
                processed_data.append({
                    "Filename": image_file,
                    "NbDetectedSpots": NbDetectedSpots,
                    "NbTimepoints": imp.getNFrames()
                })
                imp.close()
    else:
        # Process every image currently open in FIJI
        image_ids = WindowManager.getIDList()
        if image_ids is not None:
            for image_id in image_ids:
                imp = WindowManager.getImage(image_id)
                NbDetectedSpots = run_trackmate_detection(imp, TrackMateSettingsStored, SaveCSV=True)
                processed_files.append(imp.getTitle())
                processed_data.append({
                    "Filename": imp.getTitle(),
                    "NbDetectedSpots": NbDetectedSpots,
                    "NbTimepoints": imp.getNFrames()
                })
else:
    # Process only the active image
    NbDetectedSpots = run_trackmate_detection(imp, TrackMateSettingsStored, SaveCSV=True)
    processed_files.append(imp.getTitle())
    processed_data.append({
        "Filename": imp.getTitle(),
        "NbDetectedSpots": NbDetectedSpots,
        "NbTimepoints": imp.getNFrames()
    })

# Display a message dialog at the end of processing
message = "Processing Complete!\nNumber of files processed: {}\nOutput files are saved in: {}".format(len(processed_files), output_dir)
JOptionPane.showMessageDialog(None, message, "Processing Complete", JOptionPane.INFORMATION_MESSAGE)

# Close the last image if it was opened from a folder
if image_from_folder and imp is not None:
    imp.close()

# Display summary in the log at the end
IJ.log("=== Summary of Processed Files ===")
for data in processed_data:
    IJ.log("Filename: {} | Detected spots: {} | Number of timepoints: {}".format(
        data["Filename"], data["NbDetectedSpots"], data["NbTimepoints"]
    ))

This script automates the process of detecting and tracking spots using the TrackMate plugin for ImageJ/FIJI. To use it:

- Drop the script into the FIJI toolbar and click Run.

If images are already opened, the script will:

- Prompt the user to configure settings, such as enabling subpixel localization, adjusting spot diameter, setting the threshold, and applying median filtering. These settings are either loaded from a previous configuration stored in ImageJ's preferences or set to defaults. It is highly recommended to run TrackMate manually at least once on a representative image to define optimal detection parameters (ideally detecting one spot per frame).

- Allow the user to choose whether to process all images or just the active one.

- For each image:

- Configure the TrackMate detector (using the Difference of Gaussians method) and tracker (SparseLAPTracker).

- Analyze features and filter tracks based on user-defined settings.

- Process the image, track spots, and display results with visualization in the active window.

- Export detected spot data as a uniquely named CSV file in an "Output" directory on the desktop.

- Save user-defined settings to ImageJ's preferences for future use.

- At the end, display a summary dialog indicating the number of processed files and the location of the saved output.

If no image is opened, the script will:

- Prompt the user to select a folder containing images for processing.

- Open the first image and follow the same workflow as above, processing either a single image or all images in the folder as specified by the user.

This script generates a CSV file for each image, which can be aggregated for further analysis using the accompanying R script, XYZ_Repositioning_Processing_Script.R. This R script processes all CSV files in a selected folder and saves the merged data as XYZ_Repositioning_Merged-Data.csv in an "Output" folder on the user's desktop for streamlined data analysis.

# Clear the workspace
rm(list = ls())

# Check and install required packages if they are not already installed
# (tcltk ships with R and cannot be installed from CRAN, so it is only loaded)
if (!require(dplyr)) install.packages("dplyr", dependencies = TRUE)
if (!require(stringr)) install.packages("stringr", dependencies = TRUE)
if (!require(ggplot2)) install.packages("ggplot2", dependencies = TRUE)

# Load libraries
library(dplyr)
library(stringr)
library(ggplot2)
library(tcltk)

# Set default input directory (the Desktop; USERPROFILE is Windows-specific)
default_input_dir <- file.path(Sys.getenv("USERPROFILE"), "Desktop")
InputFolder <- tclvalue(tkchooseDirectory(initialdir = default_input_dir, title = "Select a folder containing CSV files"))
#InputFolder <- default_input_dir # Use default folder

# Specify the Output folder (this is a fixed folder on the Desktop)
OutputFolder <- file.path(Sys.getenv("USERPROFILE"), "Desktop", "Output")
if (!dir.exists(OutputFolder)) dir.create(OutputFolder, recursive = TRUE)

# List all CSV files in the folder
csv_files <- list.files(path = InputFolder, pattern = "\\.csv$", full.names = TRUE)
if (length(csv_files) == 0) stop("No CSV files found in the selected directory.")

# Read the column names from the first row of the first file
header <- names(read.csv(csv_files[1], nrows = 1))

# Function to clean the filename
clean_filename <- function(filename) {
  filename_parts <- strsplit(filename, " - ")[[1]]
  cleaned_filename <- sub("\\.czi", "", filename_parts[1])
  cleaned_filename <- sub("_spots", "", cleaned_filename)
  cleaned_filename <- sub("\\.csv", "", cleaned_filename)
  return(cleaned_filename)
}

# Read and merge all CSV files
merged_data <- csv_files %>%
  lapply(function(file) {
    # Skip the four header rows written by TrackMate and reuse the column names
    data <- read.csv(file, skip = 4, header = FALSE)
    colnames(data) <- header
    data <- data %>% arrange(FRAME)

    # Clean and add source file info
    filename <- basename(file)
    data$SourceFile <- clean_filename(filename)

    # Extract variables from the filename (underscore-separated fields)
    filename_parts <- strsplit(clean_filename(filename), "_")[[1]]
    for (i in seq_along(filename_parts)) {
      variable_name <- paste("Variable-", sprintf("%03d", i), sep = "")
      data[[variable_name]] <- filename_parts[i]
    }

    # Add time columns if available
    if ("POSITION_T" %in% colnames(data)) {
      data$`Time (sec)` <- round(data$POSITION_T, 0)
      data$`Time (min)` <- round(data$`Time (sec)` / 60, 2)
    }

    # Calculate positions relative to Frame 0, converted to nm
    if ("POSITION_X" %in% colnames(data)) {
      first_value <- data$POSITION_X[data$FRAME == 0][[1]]
      data$`X (nm)` <- (data$POSITION_X - first_value) * 1000
    }
    if ("POSITION_Y" %in% colnames(data)) {
      first_value <- data$POSITION_Y[data$FRAME == 0][[1]]
      data$`Y (nm)` <- (data$POSITION_Y - first_value) * 1000
    }
    if ("POSITION_Z" %in% colnames(data)) {
      first_value <- data$POSITION_Z[data$FRAME == 0][[1]]
      data$`Z (nm)` <- (data$POSITION_Z - first_value) * 1000
    }

    # Calculate frame-to-frame displacement (3D and 2D)
    if (all(c("X (nm)", "Y (nm)", "Z (nm)") %in% colnames(data))) {
      data$`Displacement 3D (nm)` <- sqrt(
        diff(c(0, data$`X (nm)`))^2 +
          diff(c(0, data$`Y (nm)`))^2 +
          diff(c(0, data$`Z (nm)`))^2
      )
      data$`Displacement 3D (nm)`[1] <- 0
    }
    if (all(c("X (nm)", "Y (nm)") %in% colnames(data))) {
      data$`Displacement 2D (nm)` <- sqrt(
        diff(c(0, data$`X (nm)`))^2 +
          diff(c(0, data$`Y (nm)`))^2
      )
      data$`Displacement 2D (nm)`[1] <- 0
    }
    return(data)
  }) %>%
  bind_rows()

# Save the merged data to a new CSV file
output_file <- "XYZ_Repositioning_Merged-Data.csv"
write.csv(merged_data, file = file.path(OutputFolder, output_file), row.names = FALSE)
cat("All CSV files have been merged and saved to", file.path(OutputFolder, output_file), "\n")

This R code automates the processing of multiple CSV files containing spot tracking data. Specifically, it:

- Installs and loads required libraries

- Sets directories for input (CSV files) and output (merged data) on the user's desktop.

- Lists all CSV files in the input directory and reads in the header from the first CSV file.

- Defines a filename cleaning function to extract relevant metadata from the filenames (e.g., removing extensions, and extracting variables).

- Reads and processes each CSV file:

- Skips initial rows and assigns column names.

- Cleans up filenames and adds them to the dataset.

- Calculates the position in the X, Y, and Z axes relative to the initial position with the formula PositionRelative = Position - PositionInitial, and computes both 2D and 3D displacement values between consecutive frames with the following formulas: 2D_Displacement = Sqrt((X2 - X1)^2 + (Y2 - Y1)^2); 3D_Displacement = Sqrt((X2 - X1)^2 + (Y2 - Y1)^2 + (Z2 - Z1)^2)

- Merges all the processed data into a single dataframe.

- Saves the results as XYZ_Repositioning_Merged-Data.csv, located in the "Output" folder on the user's desktop. It can be further processed and summarized with a pivot table as shown in the following spreadsheet: XYZ_Repositioning Dispersion_Traveled Distance_Template.xlsx
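The filename handling is the least obvious step of the R script, so here is a small Python re-expression of it for illustration. It mirrors the script's logic (keep the part before " - ", strip ".czi", "_spots" and ".csv", then split on underscores). The example filename is hypothetical; your acquisition software may name files differently.

```python
def clean_filename(filename):
    """Mirror of the R clean_filename(): keep the text before ' - '
    and strip the first '.czi', '_spots' and '.csv' occurrences."""
    cleaned = filename.split(" - ")[0]
    for token in (".czi", "_spots", ".csv"):
        cleaned = cleaned.replace(token, "", 1)
    return cleaned


def variables_from_filename(filename):
    """Split the cleaned name on underscores into Variable-001, -002, ..."""
    parts = clean_filename(filename).split("_")
    return {"Variable-{:03d}".format(i): part
            for i, part in enumerate(parts, start=1)}


# Hypothetical filename, for illustration only.
example = "Dist-1000_Speed-100_Acc-010.czi - Dist-1000_Speed-100_Acc-010.czi #1_Spots-001.csv"
print(clean_filename(example))
print(variables_from_filename(example))
```

Naming files with one underscore-separated field per experimental variable makes each variable available as its own column for the pivot table.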

Using the first frame as a reference we can plot the average XYZ position for each frame.

As observed earlier, there is a significant displacement between Frame 0 and Frame 1, particularly along the X-axis. For this analysis, we will exclude the first two frames and focus on the variables of interest: (i) Traveled distance, (ii) Speed, and (iii) Acceleration.

Repositioning Dispersion: Impact of Traveled Distance

Results

Plot the 2D displacement versus the frame number for each condition of traveled distance.

The data looks good now that the first two frames are ignored. We can then calculate the average of the standard deviation of the 2D displacement and plot these values against the traveled distance.

We observe a power-law relationship, described by the equation: Repositioning Dispersion = 12.76 x Traveled Distance^0.2573

| Traveled Distance (µm) | Repositioning Dispersion (nm) |

| 0 | 5 |

| 1 | 12 |

| 10 | 27 |

| 100 | 31 |

| 1000 | 107 |

| 10000 | 121 |

| 30000 | 175 |
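A power-law fit of this kind can be reproduced with a linear regression in log-log space. The sketch below is one possible way to do it, not necessarily how the spreadsheet trendline was computed. It uses the rounded values from the table and assumes the 0 µm point is excluded, since its logarithm is undefined.

```python
import math

# Values from the table above; the 0 µm row is left out (log(0) is undefined).
distance_um = [1, 10, 100, 1000, 10000, 30000]
dispersion_nm = [12, 27, 31, 107, 121, 175]


def fit_power_law(x, y):
    """Least-squares fit of y = a * x^b via a straight line in log-log space."""
    lx = [math.log10(v) for v in x]
    ly = [math.log10(v) for v in y]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((a - mean_x) ** 2 for a in lx)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    exponent = sxy / sxx
    prefactor = 10 ** (mean_y - exponent * mean_x)
    return prefactor, exponent


prefactor, exponent = fit_power_law(distance_um, dispersion_nm)
print("Dispersion = {:.2f} x Distance^{:.4f}".format(prefactor, exponent))
```

The fitted coefficients should land close to those reported above; small differences are expected because the table values are rounded.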

Conclusion

Repositioning Dispersion: Impact of Speed and Acceleration

Results

Plot the average XYZ relative position against the frame for all conditions.

Generate a plot of the 2D displacement as a function of frame number for each combination of Speed and Acceleration conditions. This visualization will help assess the relationship between displacement and time across the different experimental settings.

As noted earlier, there is a significant displacement between Frame 0 and Frame 1, particularly along the X-axis (600 nm) and, to a lesser extent, the Y-axis (280 nm). To refine our analysis, we will exclude the first two frames and focus on the key variables of interest: (i) Speed and (ii) Acceleration. To better understand the system's behavior, we will visualize the average standard deviation of the 2D displacement for each combination of Speed and Acceleration conditions.

Our observations indicate that both Acceleration and Speed contribute to an increase in 2D repositioning dispersion. However, a two-way ANOVA reveals that only Speed has a statistically significant effect on 2D repositioning dispersion. Post-hoc analysis further demonstrates that the dispersion for the Speed-Fast, Acc-High condition is significantly greater than that of the Speed-Slow, Acc-Low condition.

| Condition | 2D Repositioning Dispersion (nm) |

| Speed-Slow Acc-Low | 32 |

| Speed-Slow Acc-High | 49 |

| Speed-Fast Acc-Low | 54 |

| Speed-Fast Acc-High | 78 |
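As a quick sanity check on the table, the average effect of each factor can be read from its marginal means. This sketch only summarizes the four values above; it is not a substitute for the two-way ANOVA, which requires the replicate-level data. The function name is hypothetical.

```python
# 2D repositioning dispersion (nm) from the table, keyed by (speed, acceleration).
dispersion_nm = {
    ("Slow", "Low"): 32,
    ("Slow", "High"): 49,
    ("Fast", "Low"): 54,
    ("Fast", "High"): 78,
}


def marginal_mean(table, factor_index, level):
    """Mean dispersion over all conditions where one factor has a given level."""
    values = [v for key, v in table.items() if key[factor_index] == level]
    return sum(values) / float(len(values))


speed_effect = marginal_mean(dispersion_nm, 0, "Fast") - marginal_mean(dispersion_nm, 0, "Slow")
acc_effect = marginal_mean(dispersion_nm, 1, "High") - marginal_mean(dispersion_nm, 1, "Low")

print("Average increase from Slow to Fast speed (nm):", speed_effect)
print("Average increase from Low to High acceleration (nm):", acc_effect)
```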

Conclusion

What about the initial shift?

Right, I almost forgot about that. See below.

Results

Plot the 3D displacement for each tested condition from the previous data.

We observe a single floating point that corresponds to the displacement between Frame 0 and Frame 1. This leads me to hypothesize that the discrepancy may be related to the stage's dual motors, each controlling a separate axis (X and Y). Each motor operates in two directions (Positive and Negative). Since the shift occurs only at the first frame, this likely relates to how the experiment is initiated.

To explore this further, I decided to test whether these variables significantly impact the repositioning. We followed the XYZ repositioning dispersion protocol, testing the following parameters:

- Distance: 1000 µm

- Speed: 100%

- Acceleration: 100%

- Axis: X, Y, XY

- Starting Point: Centered (on target), Positive (shifted positively from the reference position), Negative (shifted negatively from the reference position)

- For each condition, three datasets were acquired.

The data (XYZ_Repositining_Diagnostic_Data.xlsx) was processed as described above, and we plotted the 2D displacement as a function of the frame for each condition.

When moving along the X-axis only, we observe a shift in displacement when the starting position is either centered or positively shifted, but no shift occurs when the starting position is negatively shifted. This suggests that the behavior of the stage’s motor or the initialization of the experiment may be affected by the direction of the shift relative to the reference position, specifically when moving in the positive direction.

When moving along the Y-axis only, we observe a shift in displacement when the starting position is positively shifted, but no shift occurs when the starting position is either centered or negatively shifted. This indicates that the stage's motor behavior or initialization may be influenced by the direction of the shift, particularly when starting from a positive offset relative to the reference position.

When moving along both the X and Y axes simultaneously, a shift is observed when the starting position is centered. This shift becomes more pronounced when the starting position is positively shifted in any combination of the X and Y axes (+X+Y, +X-Y, -X+Y). However, the shift is reduced when the starting position is negatively shifted along both axes.
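To make the first-frame shift easier to spot, the frame-to-frame 2D displacement can be computed directly from the tracked positions. The following is only a minimal sketch: the column names Frame, X and Y and the position values are hypothetical and are not taken from the diagnostic dataset.

```r
# Hypothetical positions (in µm) of a reference feature over five frames.
# The column names Frame, X and Y are assumptions made for this sketch.
positions <- data.frame(
  Frame = 0:4,
  X = c(0.00, 0.30, 0.31, 0.29, 0.30),
  Y = c(0.00, 0.40, 0.41, 0.40, 0.39)
)

# 2D displacement of each frame relative to the previous frame.
# Frame 0 has no previous frame, so its displacement is NA.
positions$Displacement_2D <- c(NA, sqrt(diff(positions$X)^2 + diff(positions$Y)^2))

# Frame with the largest jump (NA values are ignored by which.max)
largest_jump_frame <- positions$Frame[which.max(positions$Displacement_2D)]

print(positions)
print(largest_jump_frame)
```

With these made-up values the largest jump is the one between Frame 0 and Frame 1, which is the pattern described above.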

Conclusion

Channel Co-Alignment

Acquisition protocol

Place 4 µm diameter fluorescent beads (TetraSpeck Fluorescent Microspheres Size Kit, mounted on a slide) on the microscope stage.

Center an isolated bead under a high-NA dry objective.

- Crop the acquisition area to only visualize one bead but keep it large enough to anticipate a potential chromatic shift along the XY and Z axes

Acquire a multi-channel Z-stack

I usually acquire all available channels, with the understanding that no more than seven channels can be processed simultaneously. If you have more than seven channels, you can split them into sets of seven or fewer, keeping one reference channel in every set.

Acquire 3 datasets

- Repeat for each objective
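If you have more than seven channels, the split into sets that share a reference channel can be sketched as follows. This is only an illustration: the function split_channels and the channel names are hypothetical, and the first channel is assumed to be the reference.

```r
# Split channels into sets of at most max_per_set, repeating the reference
# channel in every set. split_channels is a hypothetical helper.
split_channels <- function(channels, reference = channels[1], max_per_set = 7) {
  others <- setdiff(channels, reference)
  # Each set holds the reference plus up to (max_per_set - 1) other channels
  group <- ceiling(seq_along(others) / (max_per_set - 1))
  lapply(split(others, group), function(set) c(reference, set))
}

# Hypothetical list of nine channels; DAPI is used as the reference
channels <- c("DAPI", "CFP", "GFP", "YFP", "Cy3", "mCherry", "Cy5", "Cy5.5", "Cy7")
sets <- split_channels(channels)
print(sets)
```

Because every set contains the same reference channel, shifts measured in different sets can be compared against a common channel.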

Results

You should have acquired several multi-channel images that now need processing to yield meaningful results. To process them, use the Channel Co-registration analysis feature of the MetroloJ_QC plugin for FIJI. For more information about the MetroloJ_QC plugin, please refer to the manual available from MontpellierRessourcesImagerie on GitHub.

- Open FIJI.

- Load your image by dragging it into the FIJI bar.

- Launch the MetroloJ_QC plugin by navigating to Plugins > MetroloJ QC.

- Click on Channel Co-registration Report.

- Enter a Title for your report.

- Type in your Name.

- Click Microscope Acquisition Parameters and enter:

- The imaging modality (Widefield, Confocal, Spinning-Disk, Multi-photon)

- The Numerical Aperture of the Objective used.

- The Refractive index of the immersion medium. (Air: 1.0; Water: 1.33; Oil: 1.515)

- The Emission Wavelength in nm for each channel.

Click OK

- Click Bead Detection Options

- Bead Detection Threshold: Legacy

- Center Method: Legacy Fit ellipses

Enable Apply Tolerance to the Report and Reject co-registration ratio above 1.

Click File Save Options.

Select Save Results as PDF Reports.

Select Save Results as spreadsheets.

- Select Save result images.

- Select Open individual pdf report(s).

Click OK.

Repeat steps 4 through 16 for each image you have acquired

Good to know: Settings are preserved between Channel Co-alignment sessions. You may want to process images with the same objective and same channels together.

This will generate detailed results stored in a folder named Processed. The Processed folder will be located in the same directory as the original images, with each report and result saved in its own sub-folder.

Here is a script to process the channel co-alignment results generated by MetroloJ_QC: Process Ch Co-registration Results.R.

To use it, simply drag and drop the file into the R interface. You may also open it with RStudio and click the Source button. The script will:

- Prompt the user to select an input folder.

- Load all _results.xls files located in the selected folder and its subfolders.

- Process and merge all results generated by the MetroloJ_QC Channel Co-registration.

- Split the filenames using the _ character as a separator and create columns named Variable-001, Variable-002, etc. This will help in organizing the data if the filenames are formatted as Variable-A_Variable-B, for example.

- Save the result as Channel_Co-registration_Merged-Data.csv in an Output folder on the user's Desktop.
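As a standalone illustration of the filename-splitting step listed above, and separate from the full script, here is a minimal sketch. The filenames are hypothetical; only the _ separator and the Variable-001 style column names come from the description.

```r
# Hypothetical filenames following a Variable-A_Variable-B pattern
filenames <- c("ScopeA_20x_Set1", "ScopeA_63x_Set2_Extra")

# Split each filename on "_" and find the largest number of parts
parts <- strsplit(filenames, "_")
max_parts <- max(lengths(parts))

result <- data.frame(Filename = filenames, stringsAsFactors = FALSE)
for (i in seq_len(max_parts)) {
  column_name <- sprintf("Variable-%03d", i)
  # Filenames with fewer parts get NA in the extra columns
  result[[column_name]] <- sapply(parts, function(x) if (length(x) >= i) x[i] else NA_character_)
}

print(result)
```

Consistent filenames therefore matter: each position between the _ characters becomes one column in the merged table.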

# Clear the workspace

rm(list = ls())

# Ensure required packages are installed and loaded

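# tcltk ships with R and cannot be installed from CRAN; stop early if this R build lacks it

if (!requireNamespace("tcltk", quietly = TRUE)) stop("This R installation does not provide the tcltk package.")

library(tcltk)

if (!require(reshape2)) install.packages("reshape2", dependencies = TRUE)

library(reshape2)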

# Set default input and output directories

home_dir <- Sys.getenv("USERPROFILE", unset = path.expand("~")) # USERPROFILE is set on Windows only; fall back to the home directory elsewhere

default_input_dir <- file.path(home_dir, "Desktop", "Input") # Default to "Input" on Desktop

InputFolder <- tclvalue(tkchooseDirectory(initialdir = default_input_dir, title = "Select a folder containing XLS files"))

if (!nzchar(InputFolder)) stop("No input folder selected.") # The dialog returns an empty string when cancelled

OutputFolder <- file.path(home_dir, "Desktop", "Output")

if (!dir.exists(OutputFolder)) dir.create(OutputFolder, recursive = TRUE)

# List all XLS files with "_results.xls" in the folder and subfolders

xls_files <- list.files(path = InputFolder, pattern = "_results\\.xls$", full.names = TRUE, recursive = TRUE)

if (length(xls_files) == 0) stop("No _results.xls files found in the selected directory.")

# Initialize lists for storing data

ratio_data_tables <- list()

pixel_shift_tables <- list()

# Process each XLS file

for (file in xls_files) {

lines <- readLines(file, encoding = "latin1")

# Extract "Ratios" data

ratios_line_index <- grep("Ratios", lines)

if (length(ratios_line_index) == 0) {

cat("No 'Ratios' line found in file:", file, "\n")

next

}

start_row <- ratios_line_index[1] + 1

relevant_lines <- lines[start_row:length(lines)]

channel_start_index <- which(grepl("^Channel", relevant_lines))[1]

if (is.na(channel_start_index)) {

cat("No rows starting with 'Channel' found after 'Ratios' in file:", file, "\n")

next

}

contiguous_rows <- relevant_lines[channel_start_index]

for (i in (channel_start_index + 1):length(relevant_lines)) {

if (grepl("^Channel", relevant_lines[i])) {

contiguous_rows <- c(contiguous_rows, relevant_lines[i])

} else {

break

}

}

if (length(contiguous_rows) > 0) {

# Read the ratio_data table from contiguous rows

ratio_data <- read.table(text = paste(contiguous_rows, collapse = "\n"), sep = "\t", header = FALSE, stringsAsFactors = FALSE)

# Extract channel names and matrix of values

channels <- ratio_data$V1

values <- ratio_data[, -1]

colnames(values) <- channels

rownames(values) <- channels

# Melt the matrix into a long-format table

ratio_data_formatted <- melt(as.matrix(values), varnames = c("Channel_1", "Channel_2"), value.name = "Ratio")

ratio_data_formatted$Pairing <- paste(ratio_data_formatted$Channel_1, "x", ratio_data_formatted$Channel_2)

# Add filename and split into parts

filename_no_extension <- tools::file_path_sans_ext(basename(file))

ratio_data_formatted$Filename <- filename_no_extension

filename_parts <- strsplit(ratio_data_formatted$Filename, "_")

max_parts <- max(sapply(filename_parts, length))

for (i in 1:max_parts) {

new_column_name <- paste0("Variable-", sprintf("%03d", i))

ratio_data_formatted[[new_column_name]] <- sapply(filename_parts, function(x) ifelse(length(x) >= i, x[i], NA))

}

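# Add channel name pairings

# Assumes the 5th filename part (Variable-005) lists the channel names separated by "-", in channel order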

channel_names <- unlist(strsplit(ratio_data_formatted$`Variable-005`[1], "-"))

ratio_data_formatted$Channel_Name_Pairing <- apply(ratio_data_formatted, 1, function(row) {

ch1_index <- as.integer(gsub("Channel ", "", row["Channel_1"])) + 1

ch2_index <- as.integer(gsub("Channel ", "", row["Channel_2"])) + 1

paste(channel_names[ch1_index], "x", channel_names[ch2_index])

})

# Keep columns: Channel_1, Channel_2, Pairing, Ratio, Filename, Variable-001 to Variable-007, Channel_Name_Pairing (filenames must contain at least 7 parts)

ratio_data_formatted <- ratio_data_formatted[, c("Channel_1", "Channel_2", "Pairing", "Ratio", "Filename",

paste0("Variable-", sprintf("%03d", 1:7)), "Channel_Name_Pairing")]

ratio_data_tables[[basename(file)]] <- ratio_data_formatted

} else {

cat("No contiguous 'Channel' rows found to convert into a data frame.\n")

}

# Extract "Pixel Shifts" data

pixelShifts_line_index <- grep("pixelShifts", lines)

if (length(pixelShifts_line_index) > 0) {

start_row_pixel_shifts <- pixelShifts_line_index[1] + 1

relevant_pixel_shifts_lines <- lines[start_row_pixel_shifts:length(lines)]

pixel_shift_rows <- character(0)

for (line in relevant_pixel_shifts_lines) {

columns <- strsplit(line, "\t")[[1]]

if (length(columns) >= 2 && grepl("shift$", columns[2])) {

pixel_shift_rows <- c(pixel_shift_rows, line)

}

}

if (length(pixel_shift_rows) > 0) {

pixel_shift_data <- read.table(text = paste(pixel_shift_rows, collapse = "\n"), sep = "\t", header = FALSE, stringsAsFactors = FALSE)

# Set the first column name to "Channel"

colnames(pixel_shift_data)[1] <- "Channel"

# Extract unique channel names from the `Channel` column

# Filter out empty strings and get unique channel values

unique_channels <- unique(pixel_shift_data$Channel[pixel_shift_data$Channel != ""])

# Update column names: Keep "Channel" and "Axis", then use `unique_channels` for the rest

new_colnames <- c("Channel", "Axis", unique_channels)

# Update the column names of the data frame

colnames(pixel_shift_data) <- new_colnames

for (i in seq_len(nrow(pixel_shift_data))[-1]) {

if (is.na(pixel_shift_data$Channel[i]) || pixel_shift_data$Channel[i] == "") {

pixel_shift_data$Channel[i] <- pixel_shift_data$Channel[i - 1]

}

}

# Melt the pixel_shift_data into long format

pixel_shift_long <- melt(pixel_shift_data, id.vars = c("Channel", "Axis"), variable.name = "Channel_2", value.name = "Pixel_Shift")

colnames(pixel_shift_long)[1] <- "Channel_1"

# Create the Channel Pairing column

pixel_shift_long$Pairing <- paste(pixel_shift_long$Channel_1, "x", pixel_shift_long$Channel_2)

# Filter data into separate X, Y, Z based on Axis

x_shifts <- subset(pixel_shift_long, Axis == "X shift", select = c(Pairing, Pixel_Shift))

y_shifts <- subset(pixel_shift_long, Axis == "Y shift", select = c(Pairing, Pixel_Shift))

z_shifts <- subset(pixel_shift_long, Axis == "Z shift", select = c(Pairing, Pixel_Shift))

# Rename Pixel_Shift columns for clarity

colnames(x_shifts)[2] <- "X"

colnames(y_shifts)[2] <- "Y"

colnames(z_shifts)[2] <- "Z"

# Merge X, Y, Z shifts into a single data frame

pixel_shift_data_formatted <- Reduce(function(x, y) merge(x, y, by = "Pairing", all = TRUE),

list(x_shifts, y_shifts, z_shifts))

pixel_shift_data_formatted$Filename <- filename_no_extension

for (i in 1:max_parts) {

new_column_name <- paste0("Variable-", sprintf("%03d", i))

pixel_shift_data_formatted[[new_column_name]] <- sapply(filename_parts, function(x) ifelse(length(x) >= i, x[i], NA))

}

# Split Variable-005 into individual channel names

channel_names <- strsplit(pixel_shift_data_formatted$`Variable-005`, split = "-")

# Create a function to match channel names to Channel_Pairing

get_channel_pairing_name <- function(pairing, names_list) {

# Extract the channel numbers from the pairing